Are We Building AI Agents Inside-Out?

In the race to build powerful AI systems, are we focused on the wrong thing? New research suggests success lies not in agent skill alone, but in the architecture of their prompts and collaboration. This piece explores how to think less like a trainer and more like a system architect.

The world of AI is buzzing with the promise of multi-agent systems (MAS). We dream of creating sophisticated digital workforces—teams of specialized AI agents collaborating to solve complex problems, from writing and debugging code to navigating intricate scientific research. It’s an exciting frontier. But as we build, a crucial question arises: where does the true power of these systems come from?

I, like many others, have often focused on perfecting the logic of individual agents. But a recent paper from researchers at Google and the University of Cambridge sparked my curiosity and made me question that approach. What if we’ve been building these systems inside-out?

The paper, "Multi-Agent Design: Optimizing Agents with Better Prompts and Topologies", suggests that the real leverage lies not just in the capability of any single agent, but in the architecture of the agents' collaboration.

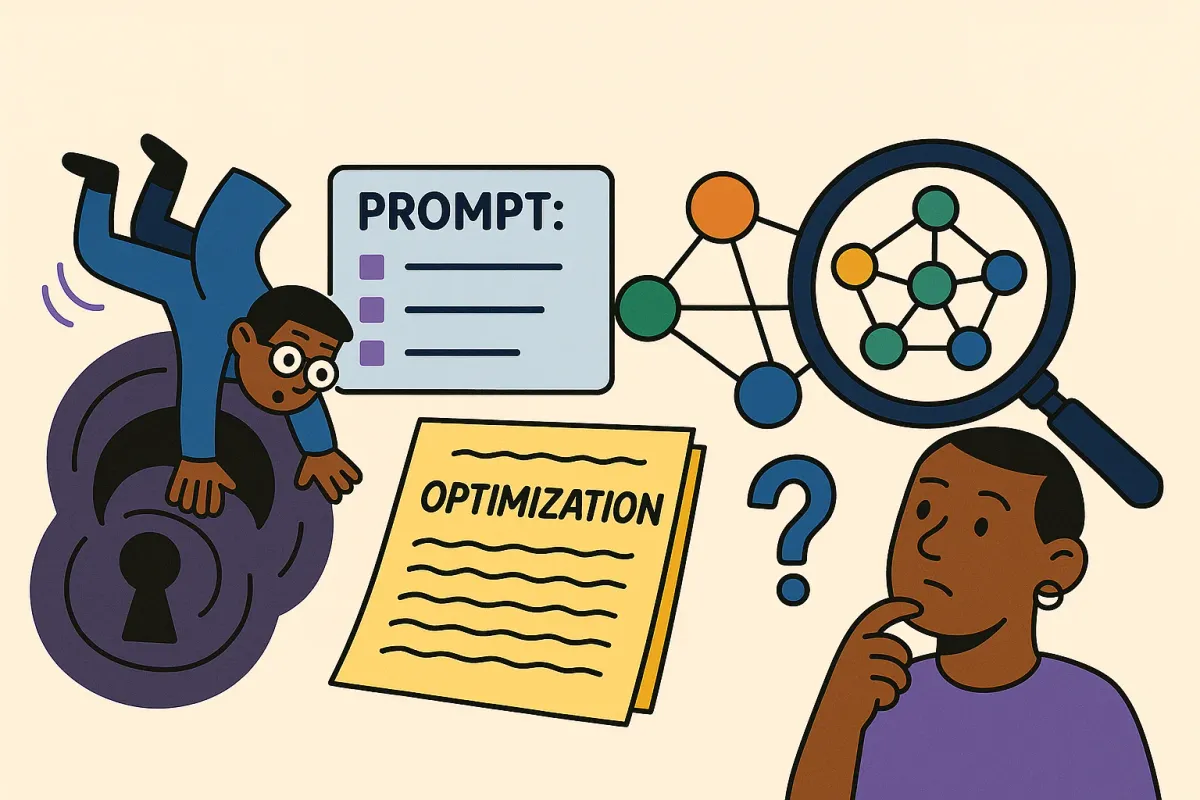

The Twin Pillars: Prompts and Topologies

After diving into the research, my main takeaway is that the effectiveness of any multi-agent system rests on two foundational pillars:

Prompts: In this context, "prompts" are more than just the initial user query. They are the core instructions, the "job descriptions" for each specialized agent in the workflow. A code-generating agent, a reviewing agent, and a testing agent all need distinct, well-crafted prompts to guide their behavior.

Topologies: This refers to the workflow's structure—the "org chart" of the agent team. It defines how agents are connected, how information flows between them, and in what sequence they operate. This could be a simple sequential pipeline, a tree-like structure where tasks are broken down and then synthesized, or more complex graph-based workflows.

The paper argues, and I'm inclined to agree, that these two elements are deeply intertwined. A brilliant agent with a poorly defined role (a bad prompt) is ineffective. Likewise, a team of brilliant agents with a dysfunctional communication structure (a bad topology) will fail.
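To make the two pillars concrete, here is a minimal sketch of how a design could be represented in code. The `Agent` and `Workflow` classes, the role names, and the prompt strings are all my own illustrative assumptions, not the paper's data model. Prompts live on the agents, the topology lives in the edges, and a design is only complete when both are specified.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """One node in the workflow: a role name plus its instruction prompt."""
    name: str
    prompt: str  # the "job description" for this agent (hypothetical text)


@dataclass
class Workflow:
    """A multi-agent design: agents (prompts) plus directed edges (topology)."""
    agents: dict[str, Agent] = field(default_factory=dict)
    edges: list[tuple[str, str]] = field(default_factory=list)  # (sender, receiver)

    def add_agent(self, name: str, prompt: str) -> None:
        self.agents[name] = Agent(name, prompt)

    def connect(self, sender: str, receiver: str) -> None:
        if sender not in self.agents or receiver not in self.agents:
            raise KeyError("both agents must exist before connecting them")
        self.edges.append((sender, receiver))

    def execution_order(self) -> list[str]:
        """Return agents in topological order (Kahn's algorithm); raise on cycles."""
        indegree = {name: 0 for name in self.agents}
        for _, receiver in self.edges:
            indegree[receiver] += 1
        ready = [name for name, degree in indegree.items() if degree == 0]
        order = []
        while ready:
            node = ready.pop(0)
            order.append(node)
            for sender, receiver in self.edges:
                if sender == node:
                    indegree[receiver] -= 1
                    if indegree[receiver] == 0:
                        ready.append(receiver)
        if len(order) != len(self.agents):
            raise ValueError("topology contains a cycle")
        return order


# A simple sequential pipeline: coder -> reviewer -> tester.
pipeline = Workflow()
pipeline.add_agent("coder", "Write a Python function that solves the task.")
pipeline.add_agent("reviewer", "Review the code and list concrete defects.")
pipeline.add_agent("tester", "Write and run unit tests against the code.")
pipeline.connect("coder", "reviewer")
pipeline.connect("reviewer", "tester")
print(pipeline.execution_order())  # ['coder', 'reviewer', 'tester']
```

Swapping a prompt string changes the first pillar; adding or rewiring an edge changes the second. Keeping them as separate fields makes it easy to vary one while holding the other fixed.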

The Needle in a Haystack: The Rarity of Good Design

Here's the insight that really caught my attention: the researchers found that the space of possible designs is vast, but the truly effective ones are rare. They report that influential topologies, the ones that lead to high task accuracy, make up only a small portion of the entire design space.

This is both daunting and empowering. It’s daunting because it means manually stumbling upon an optimal design is highly unlikely. It's empowering because it suggests that if we can develop a methodical way to search this space, we can achieve massive performance gains without necessarily needing a breakthrough in the underlying model itself. We can be smarter architects.
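A bit of back-of-the-envelope arithmetic shows why manual search is unlikely to work. The sketch below counts designs in a deliberately simplified space of my own choosing (it is not the search space defined in the paper): sequential pipelines only, built from a handful of roles, each with a few candidate prompts. The numbers 5, 10, and 4 are arbitrary assumptions.

```python
def count_sequential_designs(num_roles: int, prompts_per_role: int, max_length: int) -> int:
    """Count pipelines of length 1..max_length where every slot picks a role and a prompt."""
    choices_per_slot = num_roles * prompts_per_role
    return sum(choices_per_slot ** length for length in range(1, max_length + 1))


# 5 roles, 10 candidate prompts each, pipelines of up to 4 agents.
print(count_sequential_designs(5, 10, 4))  # 6377550
```

That is over six million candidates before allowing any branching, aggregation, or debate structures. If only a small fraction of them perform well, trial and error by hand will rarely land on one.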

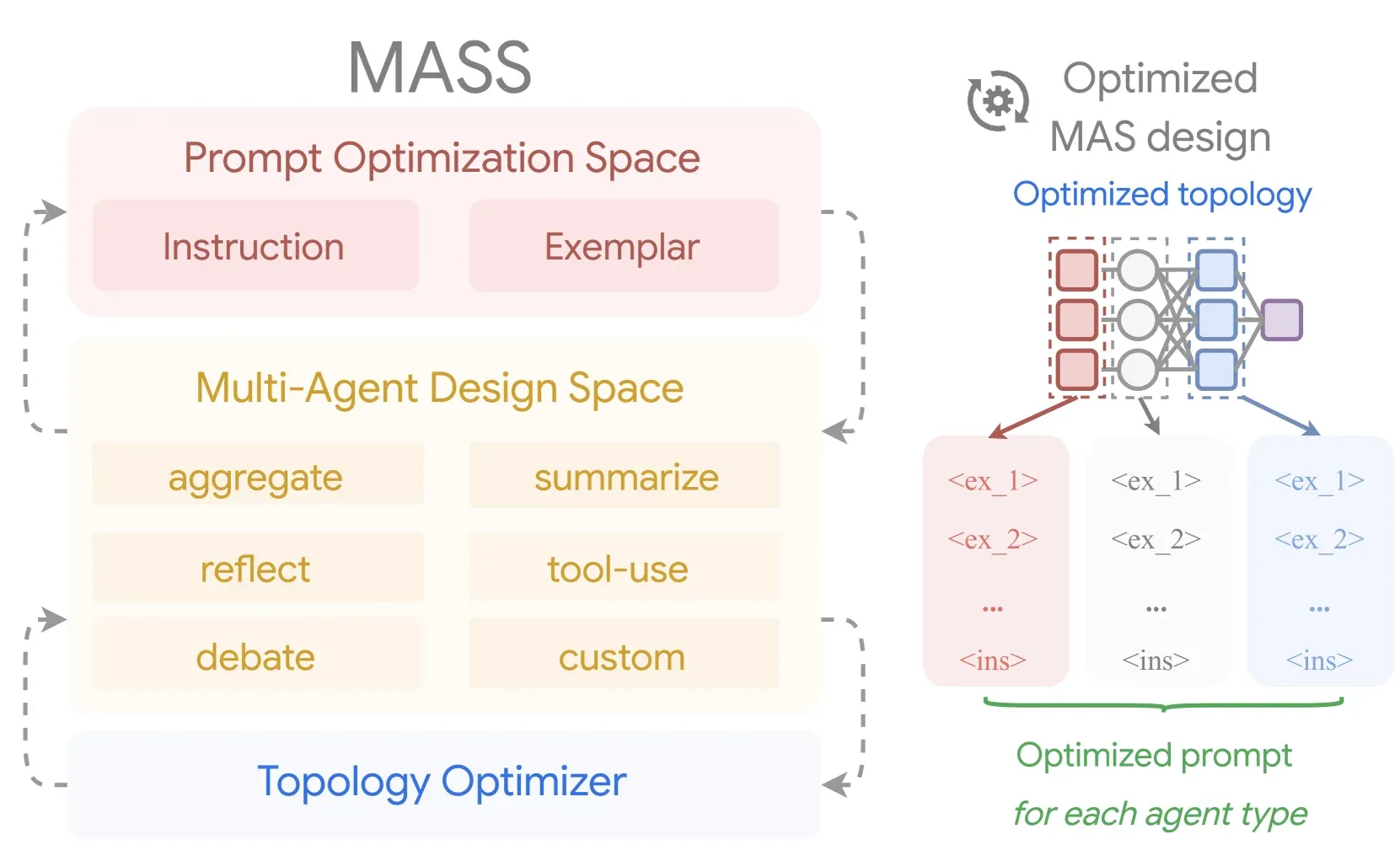

A Methodical Approach: Synthesizing Better Systems with MASS

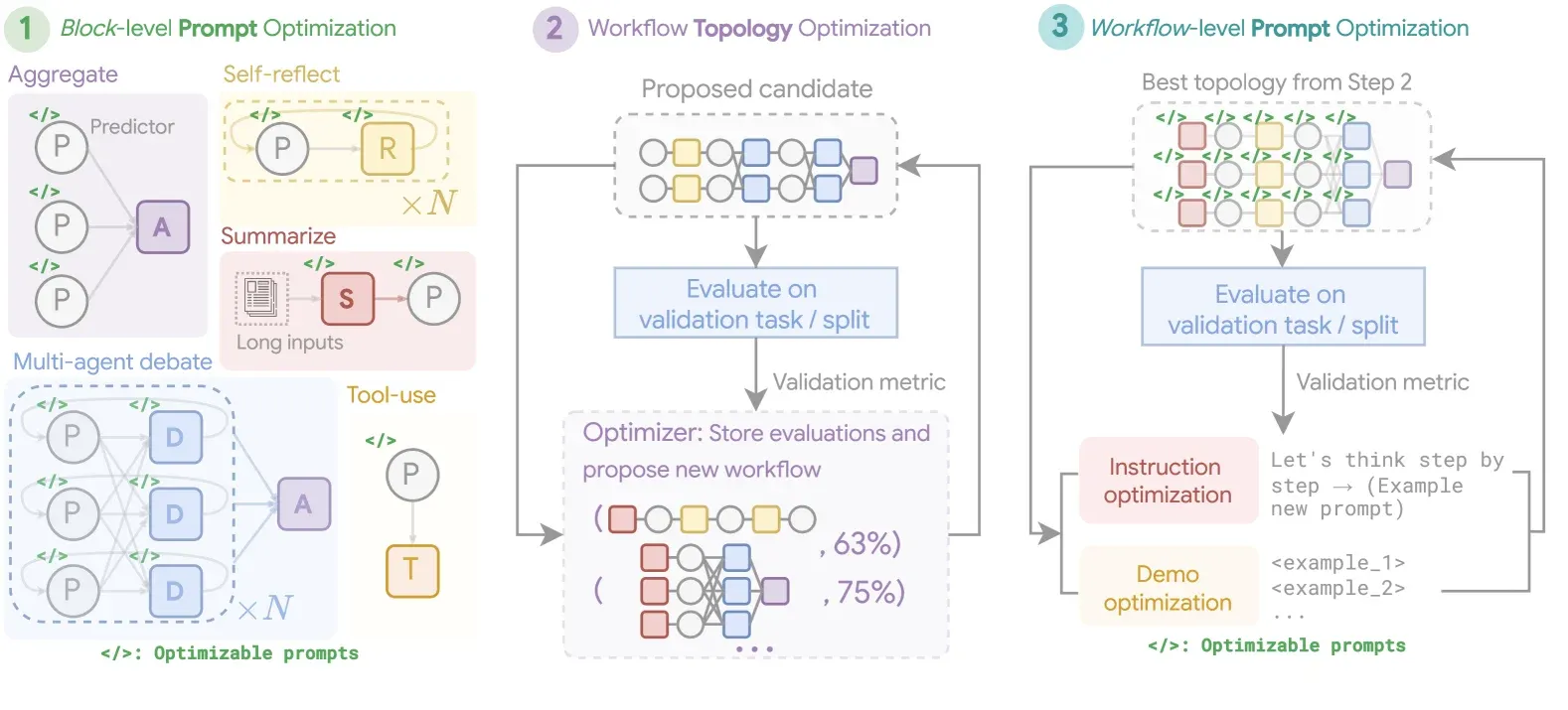

This is where the paper’s core contribution, a framework called MASS (Multi-Agent System Search), comes in. It provides an automated, three-stage process to co-design both prompts and topologies.

Here’s my distillation of how it works:

- Stage 1: Block-level Prompt Optimization. Before figuring out the team structure, MASS focuses on making each potential team member an expert at their specific task. It takes a pool of potential agents (e.g., "code generator," "reviewer") and optimizes their individual instruction prompts in isolation to maximize their standalone performance.

- Stage 2: Workflow Topology Optimization. With a set of highly capable individual agents, the framework then tackles the million-dollar question: what is the most effective way for them to collaborate? It searches through various topologies—connecting the agents in different sequences and structures—to find the workflow that yields the best results for the overall task.

- Stage 3: Workflow-level Prompt Optimization. Finally, with the optimal topology locked in, MASS performs one last round of prompt optimization. This time, however, it refines the prompts within the context of the entire workflow. An agent’s instructions might need slight adjustments now that its inputs and outputs are connected to other specific agents. It’s the final polish, ensuring the hand-offs are seamless and the team communication is perfect.

This staged progression, where each stage's output feeds the next and optimization moves from individual agents to the workflow as a whole, is what makes the process so powerful. The paper reports that on challenging benchmarks like coding and scientific question-answering, the systems synthesized by MASS significantly outperformed both manually designed systems and those generated by other automated methods.
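The three stages described above can be sketched as a toy search. To be clear about the assumptions: `toy_score`, the `STANDALONE` table, the roles, and the candidate prompts are all invented for illustration, and the brute-force and greedy searches here stand in for the far more capable optimizers used in the paper. In a real system, the scorer would run the workflow on a validation set.

```python
from itertools import permutations
from typing import Callable

Prompts = dict[str, str]    # role -> instruction prompt
Topology = tuple[str, ...]  # ordered roles in a sequential pipeline
Scorer = Callable[[Topology, Prompts], int]

SPECIALIZED = "Review the coder's output for bugs."
# Hypothetical standalone quality of each candidate prompt (points).
STANDALONE = {
    "Write code.": 40,
    "Write tested, documented code.": 60,
    "Review the text.": 30,
    SPECIALIZED: 20,
}


def toy_score(topology: Topology, prompts: Prompts) -> int:
    """Stand-in for validation accuracy; rewards good prompts and a sensible order."""
    score = sum(STANDALONE[prompts[role]] for role in topology)
    if topology == ("coder", "reviewer"):
        score += 10  # reviewing after coding helps
        if prompts["reviewer"] == SPECIALIZED:
            score += 30  # a context-aware prompt only pays off inside this workflow
    return score - 10 * (len(topology) - 1)  # each extra agent has a cost


def mass_like_search(roles: list[str], candidates: dict[str, list[str]],
                     score: Scorer, max_agents: int) -> tuple[Topology, Prompts]:
    # Stage 1: block-level prompt optimization, each role scored in isolation.
    prompts = {
        role: max(candidates[role], key=lambda p: score((role,), {role: p}))
        for role in roles
    }
    # Stage 2: topology optimization with the Stage 1 prompts held fixed.
    topologies = [t for n in range(1, max_agents + 1) for t in permutations(roles, n)]
    best_topology = max(topologies, key=lambda t: score(t, prompts))
    # Stage 3: workflow-level prompt optimization inside the chosen topology.
    for role in best_topology:
        for candidate in candidates[role]:
            trial = {**prompts, role: candidate}
            if score(best_topology, trial) > score(best_topology, prompts):
                prompts = trial
    return best_topology, prompts


candidates = {
    "coder": ["Write code.", "Write tested, documented code."],
    "reviewer": ["Review the text.", SPECIALIZED],
}
topology, prompts = mass_like_search(["coder", "reviewer"], candidates, toy_score, 2)
print(topology)                      # ('coder', 'reviewer')
print(prompts["reviewer"])           # Review the coder's output for bugs.
print(toy_score(topology, prompts))  # 110
```

Notice what Stage 3 buys in this toy setup: the specialized reviewer prompt loses in isolation (20 points versus 30), so Stage 1 rejects it, yet it wins once the reviewer sits downstream of the coder. That is the kind of interaction between prompts and topology that optimizing either one alone would miss.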

Deeper Lessons in Agent Architecture

For me, the most valuable part of this research is the set of generalizable design principles we can extract from its findings:

- More Agents Aren't Always Better: The study showed that performance can saturate or even degrade after adding a certain number of agents. Adding complexity doesn't guarantee better results.

- Simple Can Be Powerful: Surprisingly, complex tree-structured topologies didn't always win. With well-optimized prompts, a simple sequential pipeline often performed just as well or even better. A small, focused team with clear instructions can outperform a large, complex one.

- Prompt Quality is Paramount: The research underscores that investing in high-quality prompts can elevate the performance of even the simplest topologies.

This has me thinking: are we, as developers and architects, spending enough time on the architecture of collaboration, or are we too focused on the agents in isolation? It feels like we have an opportunity to move from being just agent trainers to becoming true system architects.

I'm curious to hear how others are approaching the co-design of prompts and agent interaction. Does this resonate with your experiences?

For anyone interested in the full details, I highly recommend reading the paper, "Multi-Agent Design: Optimizing Agents with Better Prompts and Topologies", available at https://arxiv.org/abs/2502.02533.