Automating LLMS-TXT Context for the Lazy Engineer

TL;DR: Programmer was too lazy to manually upload text files to AI coding agents. Found an MCP server that helps, but was too lazy to keep updating its command arguments. Spent a couple of hours writing a daemon that restarts the server with the required changes whenever the config or directory changes.

As a software engineer who frequently uses AI coding assistants like an expert-level rubber duck, I found myself stuck in a frustratingly repetitive loop. The dance of downloading llms.txt files, meticulously attaching them to my code agent sessions, and ensuring they had the right context was becoming a real drag on my productivity. It was a small but constant friction point.

From the MCPDoc project: llms.txt is a website index for LLMs, providing background information, guidance, and links to detailed markdown files. IDEs like Cursor and Windsurf or apps like Claude Code/Desktop can use llms.txt to retrieve context for tasks.

mcpdoc-daemon is available on GitHub.

The Problem: Context is King, but Managing it is a Chore

Modern LLMs are powerful, but they are far more effective when they have the right context. For developers, this often means feeding them API documentation, library source code, or internal project docs. llms.txt files give code agents an easy way to receive that context. But every time I switched projects or needed to add context about a new dependency, it was back to the download-and-attach grind. Not to mention the context window limits: you need to be selective about what you attach, and when.

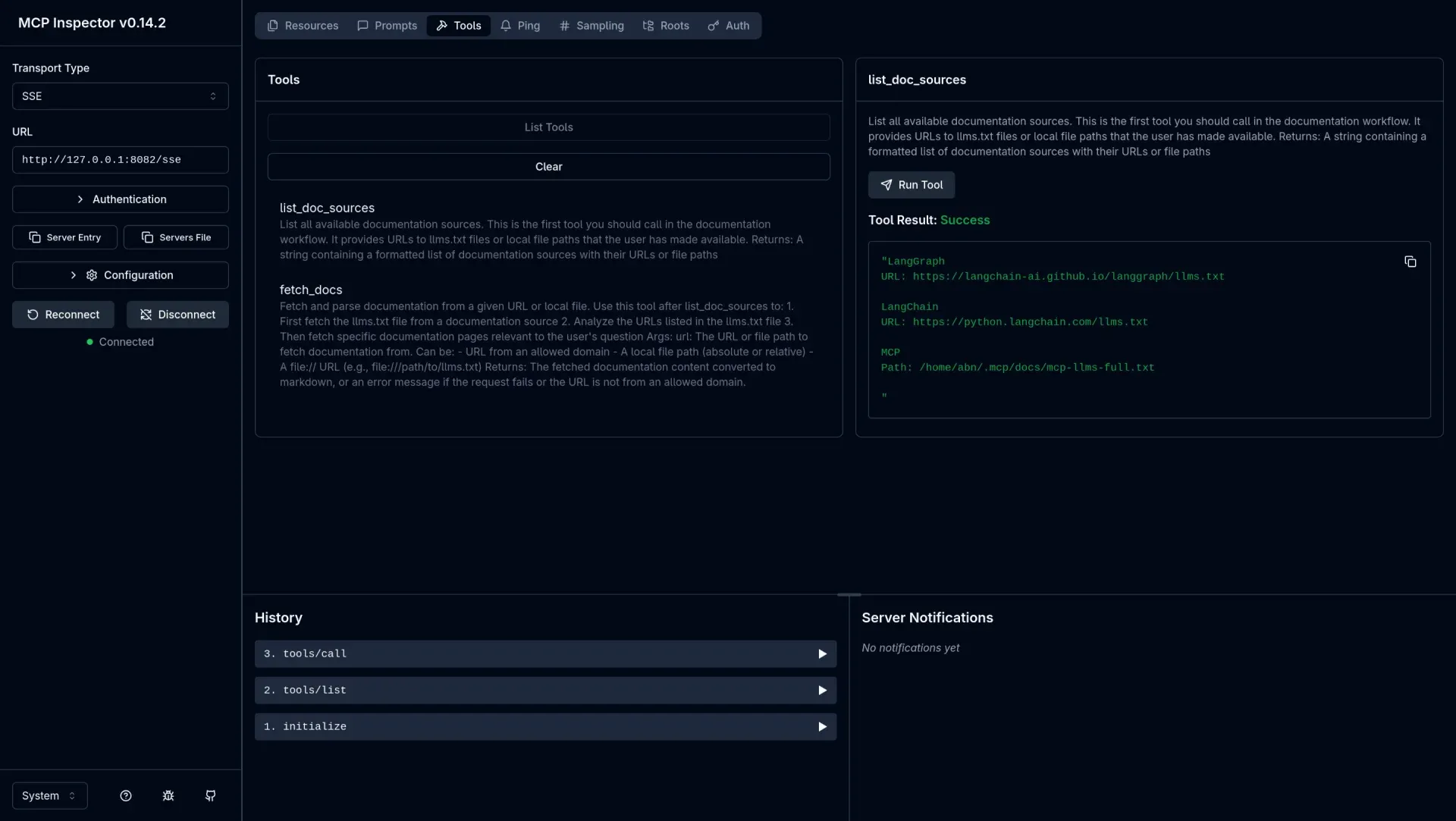

Many llms.txt files are readily available over the network, while some may even have to be generated manually from documentation. Being able to use local files is great, as it also enables working offline.

My search for a better way led me to the excellent MCP LLMS-TXT Documentation Server (mcpdoc) project. This tool was a game-changer. It allowed me to expose my llms.txt files using the Model Context Protocol (MCP). My code assistants could now connect to this MCP server, and I no longer had to manually attach files. Plus, it left the decision of what documentation to use, and when, up to the agent. It was a huge step forward!

```shell
uvx --from mcpdoc mcpdoc \
  --urls "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt" \
         "LangChain:https://python.langchain.com/llms.txt" \
         "MCP:/home/abn/.mcp/docs/mcp-llms-full.txt" \
  --allowed-domains "*" \
  --transport sse \
  --port 8082 \
  --host 127.0.0.1
```

Example command to run an MCPDoc server with both remote and local llms.txt files.

But then, my inherent laziness as an engineer kicked in. While mcpdoc solved the attachment problem, it still required manual intervention. Every time I added a new llms.txt file for a new service or updated an existing one, I had to stop the server, update the command-line arguments, and restart it.

It worked, but it wasn't seamless. I thought to myself, "There has to be a way to automate this. How hard could it be to just watch a directory for changes and reload the server automatically?" - Famous Last Words ™.

The Solution: mcpdoc-daemon

That's why I cobbled together mcpdoc-daemon. It's a simple, lightweight daemon that wraps the mcpdoc CLI and adds the automation layer I was missing.

The core idea is straightforward:

- Point the daemon at a configuration directory.
- The daemon scans the directory for a config.yaml and any *.llms.txt files.
- It automatically builds the correct mcpdoc command and starts the server as a background process.
- Crucially, it watches the directory for any file changes: modifications, creations, or deletions.
- When a change is detected, the daemon gracefully restarts the mcpdoc server with the updated configuration.

This means I can now manage my entire LLM context by simply adding, removing, or editing text files in a folder. The changes are picked up and served automatically. I can add context from local files or from external URLs (via the config.yaml file), and the daemon handles the rest.
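To make the list above concrete, here is a minimal bash sketch of the scan, build, watch, and restart cycle. It is not mcpdoc-daemon's actual code (the daemon is a Python package), and the function names build_mcpdoc_args, dir_fingerprint, and watch_and_serve are hypothetical. It assumes bash 4+, GNU find, that mcpdoc accepts a --yaml or --json config file alongside --urls, and that uvx forwards SIGTERM to mcpdoc; the --transport, --host, and --port flags are taken from the example command earlier, with the port set to the daemon's default of 8080.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the daemon's loop; NOT mcpdoc-daemon's real code.
# Requires bash 4+ (mapfile) and GNU find (-printf).

# Print mcpdoc arguments for a config directory, one argument per line.
build_mcpdoc_args() {
  local dir="$1" f
  local -a args=() urls=()
  # Remote URLs: prefer config.yaml, fall back to config.json.
  if [[ -f "$dir/config.yaml" ]]; then
    args+=(--yaml "$dir/config.yaml")
  elif [[ -f "$dir/config.json" ]]; then
    args+=(--json "$dir/config.json")
  fi
  # Local files: ElectricSQL.llms.txt -> "ElectricSQL:/path/ElectricSQL.llms.txt".
  for f in "$dir"/*.llms.txt; do
    [[ -e "$f" ]] || continue  # glob matched nothing
    urls+=("$(basename "$f" .llms.txt):$f")
  done
  if (( ${#urls[@]} > 0 )); then
    args+=(--urls "${urls[@]}")
  fi
  # Print nothing at all for an empty directory (not even a blank line).
  if (( ${#args[@]} > 0 )); then
    printf '%s\n' "${args[@]}"
  fi
}

# Checksum of every file's name, size and mtime: creating, deleting or
# modifying a file in the directory changes the result.
dir_fingerprint() {
  find "$1" -maxdepth 1 -type f -printf '%f %s %T@\n' | sort | cksum
}

# Poll the directory; (re)start mcpdoc whenever the fingerprint changes.
watch_and_serve() {
  local dir="$1" interval="${2:-2}" last="" now pid=""
  local -a args=()
  while true; do
    now=$(dir_fingerprint "$dir")
    if [[ "$now" != "$last" ]]; then
      if [[ -n "$pid" ]]; then
        # Stop the old server and wait for it to exit before restarting.
        # Assumes uvx forwards SIGTERM to the mcpdoc process it launched.
        kill -TERM "$pid" 2>/dev/null
        wait "$pid" 2>/dev/null
      fi
      mapfile -t args < <(build_mcpdoc_args "$dir")
      uvx --from mcpdoc mcpdoc "${args[@]}" \
        --transport sse --host 127.0.0.1 --port 8080 &
      pid=$!
      last="$now"
    fi
    sleep "$interval"
  done
}
```

Calling watch_and_serve ~/.config/mcpdoc would start the server and restart it within a couple of seconds of any change. Polling keeps the sketch dependency-free; a real daemon would more likely use filesystem notifications (inotify on Linux) and would also stop the child process when it exits.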

How to Get Started

I've made it as easy as possible to get mcpdoc-daemon up and running. Here’s a quick tutorial.

Configuration

First, create a dedicated directory for your configurations. A good practice is to use the XDG standard location if you are on Linux or another Unix-like operating system:

```shell
mkdir -p ~/.config/mcpdoc
```

This is the folder we'll get the daemon to monitor. Now, let's add some remote and local llms.txt files.

1. Use the config.yaml file for remote URL(s)

The configuration file (config.yaml) should be placed in the configuration directory created above. The daemon will also pick up config.json if a YAML file is not available. This is the config file used by the mcpdoc server. Here is a sample configuration that specifies remote locations for the LangGraph Python and Model Context Protocol llms.txt files.

```yaml
# Each entry must have a llms_txt URL and optionally a name
- name: LangGraph Python
  llms_txt: https://langchain-ai.github.io/langgraph/llms.txt
- name: Model Context Protocol
  llms_txt: https://modelcontextprotocol.io/llms-full.txt
```

2. Place any local files in the config directory

Create files ending in .llms.txt. The daemon uses the filename (without the .llms.txt suffix) as the service name. For example, let's create a context file for ElectricSQL at ~/.config/mcpdoc/ElectricSQL.llms.txt. This could also be configured as a remote URL, but for this example we will use it as a local file.

```shell
curl -sLo \
  ~/.config/mcpdoc/ElectricSQL.llms.txt \
  https://electric-sql.com/llms.txt
```

Other local files follow the same naming pattern: django.llms.txt, myproject.llms.txt, etc.

Running the Daemon

You can now run the daemon from your terminal. You can use pipx or uvx for the Python package, and podman or docker for the container image. See examples below.

```shell
pipx install mcpdoc-daemon
mcpdoc-daemon --config-dir ~/.config/mcpdoc
```

Install and use via pipx

```shell
uvx mcpdoc-daemon --config-dir ~/.config/mcpdoc
```

Install and use via uvx

```shell
podman run --rm -it \
  -p 8080:8080 \
  -v ~/.config/mcpdoc:/config:z,ro \
  ghcr.io/abn/mcpdoc-daemon:latest
```

Use the container

It will start the mcpdoc server and begin watching your directory for changes. The mcpdoc server will be available at http://localhost:8080 by default. You can change the host and port configuration using environment variables or command-line options.

For the Truly Lazy: Run as a Systemd Service

Manually starting the daemon is fine, but running it as a background service is the ultimate "set it and forget it" solution. If you're on Linux, you can refer to the systemd.md file in the repository for instructions and a sample user service.
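For a rough idea of what such a user service involves, here is a minimal sketch written as a small bash function. The function name write_mcpdoc_unit, the unit contents, and the ExecStart path (pipx's default ~/.local/bin) are assumptions rather than the repository's sample; systemd.md remains the authoritative version.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a systemd *user* unit for mcpdoc-daemon.
# Assumes a pipx install, which puts executables in ~/.local/bin by default.
write_mcpdoc_unit() {
  # Target directory, defaulting to the standard per-user unit location.
  local unit_dir="${1:-${XDG_CONFIG_HOME:-$HOME/.config}/systemd/user}"
  mkdir -p "$unit_dir"
  # Quoted 'EOF' disables shell expansion inside the heredoc;
  # %h is a systemd specifier that expands to the user's home directory.
  cat > "$unit_dir/mcpdoc-daemon.service" <<'EOF'
[Unit]
Description=mcpdoc-daemon (serves llms.txt files over MCP)

[Service]
ExecStart=%h/.local/bin/mcpdoc-daemon --config-dir %h/.config/mcpdoc
Restart=on-failure

[Install]
WantedBy=default.target
EOF
}
```

After calling write_mcpdoc_unit, running systemctl --user daemon-reload followed by systemctl --user enable --now mcpdoc-daemon.service should start the daemon immediately and again whenever your user session starts.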

Conclusion

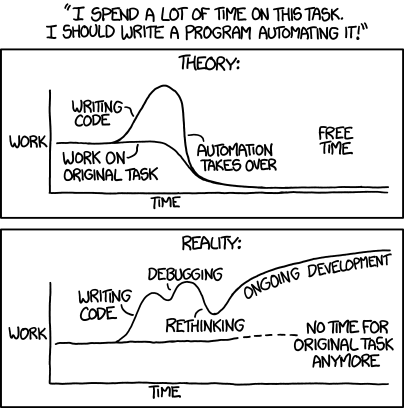

mcpdoc-daemon is a simple tool born from a simple need: to reduce the friction of a common development task. It automates the tedious parts of context management so I can focus on what's important: building things.

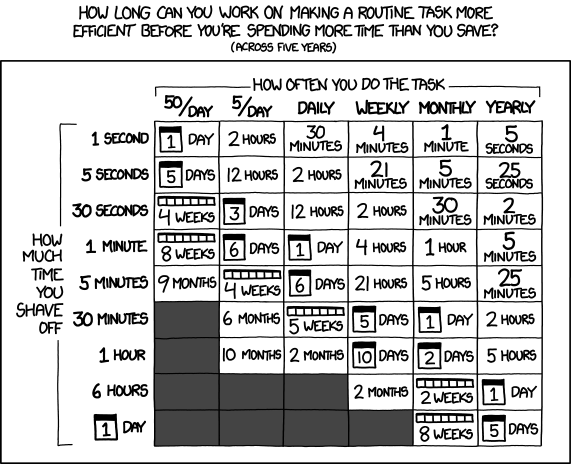

Does it make me more efficient? I have no idea. Do I like having it after spending time making it work, packaging it, and distributing it? Absolutely yes!

You can find the project on GitHub. Contributions, feedback, and ideas are always welcome!