Blueprint for an AI Supercluster: A Reference Architecture for 100,000+ Accelerators

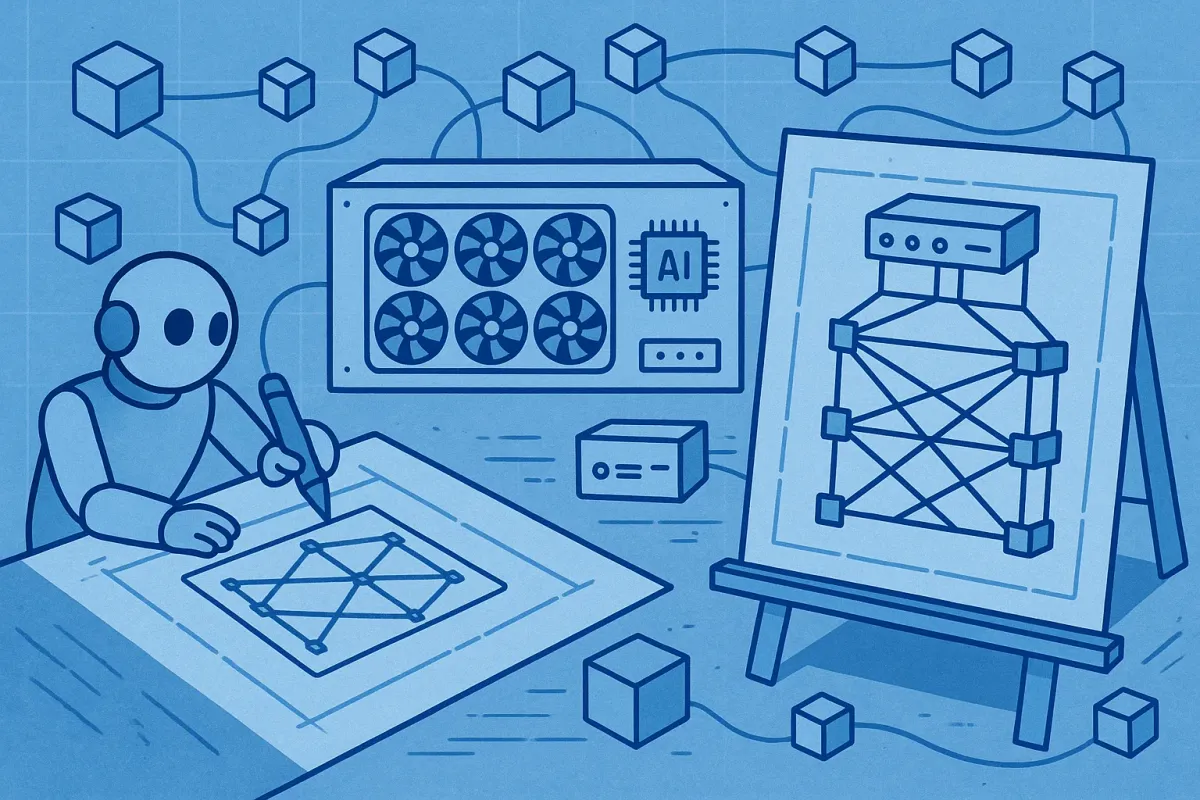

How do you actually build a 100,000-GPU cluster? This blueprint outlines a practical reference architecture: a hybrid model using proprietary links for scale-up and open UEC Ethernet for the massive scale-out, all tied together by the DPU.

Throughout this series, we've dissected the technologies, strategies, and economics shaping the future of AI networking. We've gone from the 30,000-foot view of the AI factory down to the microscopic world of network packets. Now, it's time to put it all back together.

How do you actually build one of these things? What does a practical, forward-looking reference architecture for a massive AI supercluster—one capable of scaling to 100,000 or more accelerators—look like?

This isn't a one-size-fits-all schematic, but a blueprint based on a set of core principles that synthesize everything we've learned. It’s a model designed for massive scale, operational efficiency, and long-term economic sustainability.

The Core Principle: A Hybrid Interconnect Strategy

The single most important principle of modern AI cluster design is that no single interconnect fits every layer of the system. The optimal architecture is a hybrid model that uses the right tool for each layer.

- Scale-Up (The "Memory Fabric"): This is the communication within a single server node, connecting the 8 GPUs together. Here, you need the absolute highest bandwidth and lowest latency possible. This domain will continue to be dominated by proprietary, memory-semantic interconnects like NVIDIA's NVLink or AMD's Infinity Fabric. Think of this as an extension of the server's memory system, not a traditional network.

- Scale-Out (The "Data Fabric"): This is the network that connects thousands of these individual server nodes together to form the supercluster. This is the domain where open Ethernet, built to Ultra Ethernet Consortium (UEC) specifications, will dominate. Using a proprietary interconnect at this massive scale is economically and operationally unsustainable for most operators. Open Ethernet provides the required combination of performance, multi-vendor choice, and cost-effectiveness.

The DPU is the critical bridge and demarcation point between these two worlds. It sits at the edge of the server, translating traffic that must leave the internal scale-up fabric into the Ultra Ethernet Transport (UET) protocol for transmission across the massive scale-out fabric.
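The split between the two domains can be sketched in a few lines. This is a minimal illustration, assuming GPUs carry a contiguous global rank and 8 GPUs per node as described above; the function names `node_of` and `fabric_for` are hypothetical and do not come from any vendor API.

```python
GPUS_PER_NODE = 8  # from the text: 8 GPUs per server node


def node_of(gpu_rank: int) -> int:
    """Return the server node that hosts a GPU (assumes contiguous ranks per node)."""
    return gpu_rank // GPUS_PER_NODE


def fabric_for(src_rank: int, dst_rank: int) -> str:
    """Pick the interconnect domain a GPU-to-GPU transfer would use."""
    if src_rank == dst_rank:
        raise ValueError("source and destination are the same GPU")
    if node_of(src_rank) == node_of(dst_rank):
        return "scale-up"   # stays on the in-node memory fabric
    return "scale-out"      # leaves the node through the DPU onto Ethernet


print(fabric_for(0, 7))  # GPUs 0 and 7 share node 0
print(fabric_for(7, 8))  # GPU 8 is the first GPU of node 1
```

Only the second kind of transfer ever reaches the DPU, which is why the DPU is a natural demarcation point.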

The Topology: Two-Tier, Rail-Optimized Clos

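For the scale-out fabric, the gold-standard topology is a two-tier leaf-spine fabric, a folded Clos network (often loosely called a fat-tree). In this design, every "leaf" switch (connected to servers) connects to every "spine" switch, so any two servers are at most three switch hops apart (leaf, spine, leaf) and the path length is predictable. The fabric is non-blocking when each leaf has as much uplink capacity as server-facing capacity. Scale is bounded by switch radix: with k-port switches, a non-blocking two-tier fabric tops out at roughly k²/2 endpoints. Reaching 100,000 or more accelerators in two tiers therefore depends on very high-port-count switches, on running several parallel fabric planes, or on accepting some oversubscription; otherwise a third tier is needed. Staying at two tiers minimizes the number of "hops" and thus keeps latency low.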
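The sizing arithmetic for a two-tier fabric is worth working through. The sketch below assumes a non-blocking design in which every switch has the same port count (`radix`), each leaf splits its ports evenly between servers and spines, and each leaf has one link to each spine. The `planes` parameter is an additional assumption: it models several independent two-tier fabrics running side by side (for example, one per rail), which is one way to multiply capacity without adding a tier.

```python
def two_tier_endpoints(radix: int) -> int:
    """Max endpoints of a non-blocking two-tier leaf-spine fabric of radix-port switches."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")
    downlinks_per_leaf = radix // 2  # the other half of each leaf's ports go to spines
    max_leaves = radix               # each spine port connects to a different leaf
    return max_leaves * downlinks_per_leaf


def min_radix_for(endpoints: int, planes: int = 1) -> int:
    """Smallest even radix whose two-tier capacity, summed over planes, covers the target."""
    radix = 2
    while planes * two_tier_endpoints(radix) < endpoints:
        radix += 2
    return radix


print(two_tier_endpoints(64))             # 2048
print(two_tier_endpoints(512))            # 131072
print(min_radix_for(100_000))             # 448
print(min_radix_for(100_000, planes=8))   # 160
```

Under these assumptions, a single two-tier fabric needs switches with roughly 450 or more ports to reach 100,000 endpoints, while eight parallel planes bring the requirement down to about 160 ports per switch.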

This topology must be implemented in a "rail-optimized" manner. A typical AI server has 8 GPUs and 8 corresponding network interfaces (or "rails"). In this design, each of those 8 rails is connected to a different leaf switch, and the same-numbered rail from every server in a group lands on the same leaf. This gives the traffic leaving the 8 GPUs 8 distinct, parallel paths into the fabric, maximizing bandwidth and preventing any single leaf switch from becoming a bottleneck.
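The rail-optimized cabling rule can be written as a simple mapping from (server, rail) to a leaf switch. This is a sketch under stated assumptions: servers are grouped into blocks of `servers_per_group` (a hypothetical parameter, in practice bounded by the number of server-facing ports on a leaf), and each group gets its own set of 8 leaves, one per rail.

```python
RAILS = 8  # from the text: 8 GPUs and 8 network interfaces per server


def leaf_for(server: int, rail: int, servers_per_group: int) -> int:
    """Return the index of the leaf switch that a server's given rail is cabled to."""
    if not 0 <= rail < RAILS:
        raise ValueError("rail must be in the range 0..7")
    group = server // servers_per_group
    return group * RAILS + rail  # one leaf per (group, rail) pair


# Every rail of one server lands on a different leaf.
leaves_of_server_0 = {leaf_for(0, rail, servers_per_group=32) for rail in range(RAILS)}
print(len(leaves_of_server_0))

# Rail 3 of two servers in the same group shares a leaf.
print(leaf_for(0, 3, 32) == leaf_for(31, 3, 32))
```

With this layout, traffic between same-numbered GPUs on servers in one group crosses a single leaf and never touches the spine layer.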

The Hardware and Software Blueprint

- Switches: The leaf and spine switches will be built with high-density, UEC-compliant merchant silicon, like Broadcom's Tomahawk family, packaged into systems by vendors like Arista.

- Endpoints: Every server must be equipped with a DPU or a "SuperNIC"—an advanced network adapter that can offload the entire UET protocol stack, including packet spraying and reordering. These will be UEC-compliant versions of products from NVIDIA, AMD, and Intel.

- Management: A fabric of this scale is impossible to manage manually. It requires a unified, AI-driven software plane like Arista's CloudVision. This provides zero-touch provisioning, automated configuration, and—most importantly—deep, real-time telemetry that feeds AI-powered analytics to predict bottlenecks and diagnose problems before they impact jobs.
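To make the "packet spraying and reordering" offload more concrete, here is a generic software illustration of the idea. It is not the UET wire protocol and not any vendor's implementation, and real adapters do this in hardware. The sketch assumes each packet carries a sequence number, that the sender sprays packets round-robin over the available paths, and that the receiver releases payloads strictly in order; `spray_path` and `ReorderBuffer` are hypothetical names.

```python
def spray_path(seq: int, num_paths: int) -> int:
    """Round-robin spraying: consecutive packets take different fabric paths."""
    return seq % num_paths


class ReorderBuffer:
    """Holds out-of-order packets and releases payloads in sequence order."""

    def __init__(self) -> None:
        self.next_seq = 0   # next sequence number to deliver
        self.pending = {}   # seq -> payload, for packets that arrived early

    def receive(self, seq: int, payload: str) -> list:
        """Accept one packet; return the payloads that are now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []       # duplicate packet, ignore it
        self.pending[seq] = payload
        delivered = []
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


buf = ReorderBuffer()
for seq in (1, 0, 3, 2):    # arrival order after taking different paths
    print(seq, buf.receive(seq, f"p{seq}"))
```

Spraying spreads one flow over many paths to avoid hot spots, at the cost of out-of-order arrival. Moving the reordering work into the adapter keeps that cost off the host CPU and the GPUs.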

Facility Integration: Power and Cooling are Part of the Design

Finally, this blueprint doesn't exist in a vacuum. The extreme power density of AI clusters, with server racks moving from 10 kW toward 100 kW and beyond, is forcing a convergence of IT and facilities engineering. The network architecture must be designed in concert with:

- Direct-to-Chip Liquid Cooling: Air cooling alone is no longer sufficient at these rack densities. Coolant must be piped directly to the hot components (GPUs, CPUs, switch ASICs).

- High-Voltage DC Power: To improve efficiency, data centers are moving to higher-voltage DC power distribution directly to the rack, minimizing conversion losses.
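A rough calculation shows why rack power density shapes the physical layout. The sketch below counts the compute racks needed for a target accelerator count at a given rack power budget. The 10 kW per-server figure is a hypothetical input chosen only for illustration, and the model ignores the power drawn by switches, cooling, and storage.

```python
import math


def racks_needed(accelerators: int, gpus_per_server: int,
                 server_kw: float, rack_kw: float) -> int:
    """Compute racks required when each rack is limited by its power budget."""
    servers = math.ceil(accelerators / gpus_per_server)
    servers_per_rack = int(rack_kw // server_kw)
    if servers_per_rack < 1:
        raise ValueError("rack power budget is below the draw of one server")
    return math.ceil(servers / servers_per_rack)


# Hypothetical 10 kW server, 8 GPUs each, 100,000 accelerators in total.
print(racks_needed(100_000, 8, server_kw=10.0, rack_kw=10.0))    # 12500
print(racks_needed(100_000, 8, server_kw=10.0, rack_kw=100.0))   # 1250
```

Under these assumptions, a tenfold increase in rack power budget cuts the rack count tenfold, which shortens cable runs between servers and leaf switches but concentrates heat that air cooling cannot remove.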

The network hardware must be designed from the ground up to integrate with these next-generation power and thermal management systems.