Containing Local MCP Servers

Model Context Protocol (MCP) seems to be the talk of the town these days. With tools like Anthropic's Claude Code and JetBrains' Junie able to run local MCP Server instances, MCP Servers are helping to provide relevant context to AI agents "just in time".

The quality and reliability of the MCP Servers available today is rather variable, and as with most software it will take some time for things to improve. Today, a lot of the MCP Servers out there are hacked together or vibe coded. This leads me to the first motivation for the approach detailed here: I really do not want to run arbitrary code that can access and/or modify my development environment.

And when you find an MCP Server that you think might be of use and rather excitedly try to run the advertised npx or uvx command, boom! The second motivation for this post arises: you do not have the right combination of Node version and/or system dependencies to run the server. Not to mention the number of additional packages that npx wants to install into your global modules.

So, what can we do? Maybe we could simply run them inside a container?

Manually running in containers

Here is a quick example. I use podman as this is my preference; you can easily substitute docker if that is what you use. We use LangChain's mcpdoc MCP Server as the example here.

    podman run \
      --replace \
      --name mcp-server-mcpdoc \
      --rm -it -p 8082:8082 \
      ghcr.io/astral-sh/uv:python3.13-bookworm-slim \
      uvx \
      --from mcpdoc mcpdoc \
      --urls "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt" "LangChain:https://python.langchain.com/llms.txt" \
      --transport sse \
      --port 8082 \
      --host 0.0.0.0

For npx commands, you can simply replace the image with docker.io/node:lts-slim.

Use -d instead of --rm to start and stop this container, which avoids having to download and install dependencies when starting again. You can do the same for the inspector introduced below as well.

This now starts the mcpdoc MCP Server, responding to requests on port 8082 on your host. How do you test it out? The team at Anthropic has provided us with a visual testing tool called inspector. You can run the following command to start its web interface.

podman run \

--rm -it --net=host \

docker.io/node:lts-slim npx \

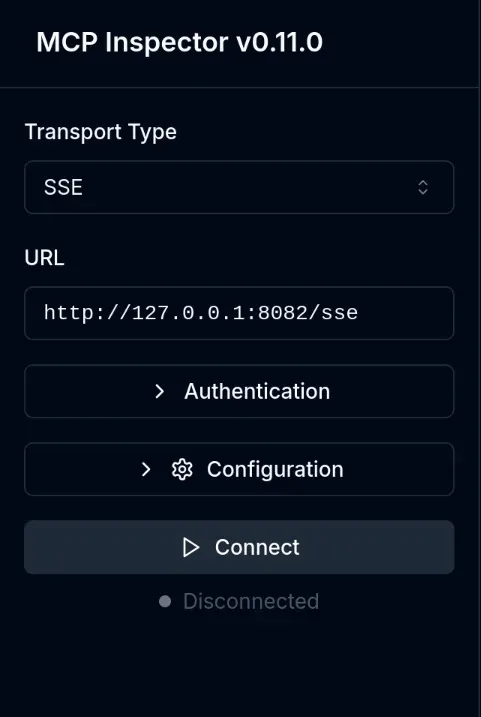

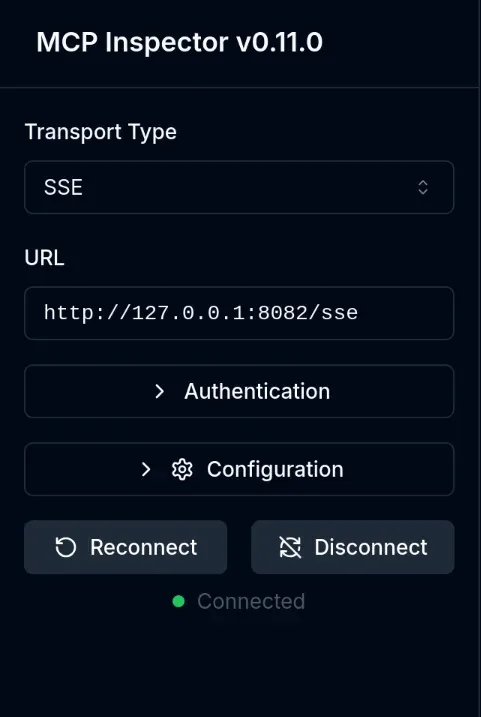

-y @modelcontextprotocol/inspector--net=host here so that the inspector can connect to the published port 8082 from the mcp-server-mcpdoc container. You can alternatively make sure that both containers are on the same network and that inspector can reach the MCP Server container by name.Once this container starts, you can then visit http://127.0.0.1:6274 on your browser. And then select transport type SSE and set URL to http://127.0.0.1:8282 or the port you have published for the MCP Server. If all goes well you will see a green dot indicating "Connected".

SSE transport is deprecated as of 2025.03.26 and replaced with StreamableHTTP instead. Not all MCP Servers have replaced SSE yet or made StreamableHTTP available.

MCP Inspector configuration example.
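The shared-network alternative to --net=host mentioned above could be sketched roughly as follows. This is only an illustration, not a tested recipe: the helper names, the DRY_RUN switch, and the network name mcp-servers are my own assumptions, and the inspector URL simply mirrors the form used elsewhere in this post with the container name in place of 127.0.0.1.

```shell
#!/usr/bin/env sh
# Sketch: put the MCP server and the inspector on one user-defined network
# so the inspector can reach the server by container name.
# Helper names, DRY_RUN and the network name are illustrative assumptions.

CONTAINER_CLI="${CONTAINER_CLI:-podman}"   # set to "docker" if you prefer
MCP_NETWORK="${MCP_NETWORK:-mcp-servers}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Create the user-defined network once.
mcp_network_create() {
  run "$CONTAINER_CLI" network create "$MCP_NETWORK"
}

# Start mcpdoc attached to that network; no port needs publishing
# for container-to-container traffic.
mcpdoc_on_network() {
  run "$CONTAINER_CLI" run -d --name mcp-server-mcpdoc \
    --network "$MCP_NETWORK" \
    ghcr.io/astral-sh/uv:python3.13-bookworm-slim \
    uvx --from mcpdoc mcpdoc \
    --urls "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt" \
    --transport sse --port 8082 --host 0.0.0.0
}

# Run the inspector CLI on the same network, addressing the server by name.
inspector_list_tools() {
  run "$CONTAINER_CLI" run --rm -t --network "$MCP_NETWORK" \
    docker.io/node:lts-slim \
    npx -y @modelcontextprotocol/inspector \
    --cli http://mcp-server-mcpdoc:8082 --method tools/list
}
```

Calling mcp_network_create, mcpdoc_on_network and inspector_list_tools in that order runs the whole flow; setting DRY_RUN=1 first only prints the commands, which is handy for checking what would be executed.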

You can also run the inspector in CLI mode, which saves you from having to use the browser. This example lists all tools available via the MCP Server.

    podman run \
      --rm -t --net=host \
      docker.io/node:lts-slim npx \
      -y @modelcontextprotocol/inspector \
      --cli http://127.0.0.1:8082 --method tools/list

If this works, you will see a JSON response like the one below.

    {
      "tools": [
        {
          "name": "list_doc_sources",
          "description": "List all available documentation sources.\n\nThis is the first tool you should call in the documentation workflow.\nIt provides URLs to llms.txt files or local file paths that the user has made available.\n\nReturns:\n A string containing a formatted list of documentation sources with their URLs or file paths\n",
          "inputSchema": {
            "type": "object",
            "properties": {},
            "title": "list_doc_sourcesArguments"
          }
        },
        {
          "name": "fetch_docs",
          "description": "Fetch and parse documentation from a given URL or local file.\n\nUse this tool after list_doc_sources to:\n1. First fetch the llms.txt file from a documentation source\n2. Analyze the URLs listed in the llms.txt file\n3. Then fetch specific documentation pages relevant to the user's question\n\nArgs:\n url: The URL to fetch documentation from.\n\nReturns:\n The fetched documentation content converted to markdown, or an error message\n if the request fails or the URL is not from an allowed domain.",
          "inputSchema": {
            "type": "object",
            "properties": {
              "url": {
                "title": "Url",
                "type": "string"
              }
            },
            "required": [
              "url"
            ],
            "title": "fetch_docsArguments"
          }
        }
      ]
    }

Using containers from MCP Server configuration

You can also offload managing these containers to your MCP Client via the MCP Server configuration property. This is supported by most clients out there, such as Claude Code, VS Code and the JetBrains IDEs.

For the mcpdoc example, the configuration might look something like this.

We use stdio transport here. You can also use sse if you desire, but remember to modify the configuration appropriately. Note that only -i is passed to podman: with stdio the client talks to the server over stdin and stdout, so no TTY should be allocated.

    {
      "mcpServers": {
        "mcpdoc": {
          "type": "stdio",
          "command": "podman",
          "args": [
            "run",
            "--rm",
            "-i",
            "ghcr.io/astral-sh/uv:python3.13-bookworm-slim",
            "uvx",
            "--from",
            "mcpdoc",
            "mcpdoc",
            "--urls",
            "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt",
            "LangChain:https://python.langchain.com/llms.txt",
            "--transport",
            "stdio"
          ]
        }
      }
    }

Using a compose file

A natural extension to all this is managing a fleet of MCP Servers with a container compose configuration. Alternatively, you can also consider using podman pods.

With the mcpdoc server and the inspector, a compose file can look something like this.

This approach does not suit servers that only support the stdio transport. For those, you will need to use a container image with an MCP proxy.

    services:
      inspector:
        image: docker.io/node:lts-slim
        command: ["npx", "-y", "@modelcontextprotocol/inspector"]
        ports:
          - "6274:6274"
        restart: unless-stopped
        networks:
          - mcp-servers
      mcpdoc-server:
        image: ghcr.io/astral-sh/uv:python3.13-bookworm-slim
        command:
          - "uvx"
          - "--from"
          - "mcpdoc"
          - "mcpdoc"
          - "--urls"
          - "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt"
          - "LangChain:https://python.langchain.com/llms.txt"
          - "--transport"
          - "sse"
          - "--port"
          - "8082"
          - "--host"
          - "0.0.0.0"
        ports:
          - "8082:8082"
        restart: unless-stopped
        networks:
          - mcp-servers
    networks:
      mcp-servers:
        name: mcp-servers
        driver: bridge

A compose.yaml file managing mcpdoc server and MCP inspector.

You can simply run podman compose up or docker-compose up to start both the mcpdoc and inspector instances.

You can run the inspector CLI command as shown here.

    podman compose exec -t inspector \
      npx -y @modelcontextprotocol/inspector \
      --cli http://mcpdoc-server:8082 \
      --method tools/list
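With the mcpdoc server from the compose file listening on port 8082, an MCP Client no longer has to spawn the container itself and can point at the running server over sse instead. The sketch below prints such a configuration. Treat it as an assumption-laden illustration: the helper name sse_client_config is mine, the "type" and "url" field names follow what several clients accept but are not confirmed for every client, and the /sse path in the usage example is a common default rather than something this post has verified for mcpdoc.

```shell
#!/usr/bin/env sh
# Sketch: print an MCP Client configuration that connects to an already
# running SSE server instead of launching a container per session.
# The helper name and the "type"/"url" field names are assumptions;
# check your client's documentation for the exact schema.

# Usage: sse_client_config <server-name> <server-url>
sse_client_config() {
  server_name="$1"
  server_url="$2"
  cat <<EOF
{
  "mcpServers": {
    "$server_name": {
      "type": "sse",
      "url": "$server_url"
    }
  }
}
EOF
}
```

For example, `sse_client_config mcpdoc http://127.0.0.1:8082/sse` prints a configuration for the server published by the compose file; redirect the output into whichever configuration file your client reads.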

Notes on the future

With an MCP server running inside a container, you can now rely on the other tooling and capabilities that podman provides to manage and connect MCP servers. This opens up opportunities for more complex local setups.
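As a small illustration of that tooling, and of the earlier tip about using -d instead of --rm, the everyday lifecycle of the mcpdoc container might be wrapped like this. It is a sketch under assumptions: the helper names and the DRY_RUN switch are hypothetical conveniences of mine, while the underlying run, stop, start, logs and rm subcommands are standard in both podman and docker.

```shell
#!/usr/bin/env sh
# Sketch: lifecycle helpers for a long-lived, containerized MCP server.
# Helper names and the DRY_RUN switch are illustrative assumptions.

CONTAINER_CLI="${CONTAINER_CLI:-podman}"            # set to "docker" if you prefer
MCP_CONTAINER="${MCP_CONTAINER:-mcp-server-mcpdoc}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Create the container detached and without --rm, so the packages that
# uvx downloads survive a stop and are not fetched again on the next start.
mcp_create() {
  run "$CONTAINER_CLI" run -d --name "$MCP_CONTAINER" -p 8082:8082 \
    ghcr.io/astral-sh/uv:python3.13-bookworm-slim \
    uvx --from mcpdoc mcpdoc \
    --urls "LangGraph:https://langchain-ai.github.io/langgraph/llms.txt" \
    --transport sse --port 8082 --host 0.0.0.0
}

# Stop the server but keep the container and its downloaded packages.
mcp_stop() { run "$CONTAINER_CLI" stop "$MCP_CONTAINER"; }

# Bring the same container back up.
mcp_start() { run "$CONTAINER_CLI" start "$MCP_CONTAINER"; }

# Show the last 50 lines of server output.
mcp_logs() { run "$CONTAINER_CLI" logs --tail 50 "$MCP_CONTAINER"; }

# Remove the container entirely, running or not.
mcp_remove() { run "$CONTAINER_CLI" rm -f "$MCP_CONTAINER"; }
```

Run mcp_create once, then use mcp_stop and mcp_start between sessions. Setting DRY_RUN=1 prints each command instead of executing it.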

The ecosystem around MCP is growing and changing quite rapidly. Between when I first had to wrap a podman run command around an npx command and writing this post, a lot has changed. Even Docker Inc. has gotten into the game with their MCP Server Catalog. This is quite useful when a server already has a ready-to-use image.

One could simply configure a stdio transport MCP Server like so.

    {
      "mcpServers": {
        "everything": {
          "command": "podman",
          "args": [
            "run",
            "-i",
            "--rm",
            "docker.io/mcp/everything"
          ]
        }
      }
    }

Everything MCP Server configuration example using Docker Hub catalog.

I look forward to seeing ideas that build on what is discussed in this post and make it easier to use MCP Servers locally.