Deconstructing Digital Minds: A Blueprint for Language Agents

Large Language Models (LLMs) are rapidly changing how we interact with technology. From answering our questions to drafting emails, their capabilities are impressive. But as we push them toward more complex tasks, asking them to act as "agents" that can reason, plan, and interact with the digital or even physical world, the field has become something of a Wild West. Many different approaches are emerging, each with its own terminology and design, which makes it hard to compare them or to build systematically on previous work.

What if we had a common blueprint for these thinking machines? And what if that blueprint wasn't entirely new, but built on decades of wisdom?

The paper titled "Cognitive Architectures for Language Agents" by Theodore R. Sumers, Shunyu Yao, Karthik Narasimhan, and Thomas L. Griffiths from Princeton University offers just that. It introduces a framework called CoALA (Cognitive Architectures for Language Agents). Interestingly, the authors don't present CoALA as a bolt from the blue. Instead, they consciously draw inspiration from the rich history of cognitive science and symbolic artificial intelligence – fields that have long grappled with how to build machines that think.

A Look Back: The Quest for Thinking Machines

The dream of creating artificial intelligence isn't new. Long before the current LLM boom, researchers were exploring how to model human thought.

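- They developed "production systems", essentially sets of "if-then" rules: whenever a rule's conditions match the system's current state, the rule fires and changes that state. Applied repeatedly, such rules let a system make decisions or solve problems. Think of these as early attempts to formalize reasoning, step by step.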

- Building on this, "cognitive architectures" emerged. These were ambitious blueprints for creating artificial minds, specifying components like memory systems, perceptual processes, and decision-making mechanisms. Architectures like Soar and ACT-R were designed to simulate human problem-solving and learning in a structured way.
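The "if-then" rule cycle behind production systems, described above, can be sketched in a few lines of Python. This is a minimal illustration, not Soar or ACT-R: the `Rule` and `recognize_act` names, the toy weather rules, and the "pick the first matching rule" conflict-resolution strategy are all simplifying assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset  # facts that must all be present (the "if" part)
    action: str            # fact added to working memory (the "then" part)


def recognize_act(rules, working_memory, max_cycles=100):
    """Run a toy recognize-act cycle until no rule can fire."""
    memory = set(working_memory)
    trace = []
    for _ in range(max_cycles):
        # Match: rules whose conditions hold and whose action adds something new.
        conflict_set = [r for r in rules
                        if r.conditions <= memory and r.action not in memory]
        if not conflict_set:
            break  # quiescence: nothing left to fire
        # Select: the simplest conflict resolution, take the first match.
        rule = conflict_set[0]
        # Act: fire the rule, updating working memory.
        memory.add(rule.action)
        trace.append(rule.name)
    return memory, trace


rules = [
    Rule("wet-if-raining", frozenset({"raining"}), "ground-wet"),
    Rule("umbrella-if-raining-and-outside",
         frozenset({"raining", "going-outside"}), "take-umbrella"),
    Rule("slippery-if-wet", frozenset({"ground-wet"}), "walk-carefully"),
]
memory, trace = recognize_act(rules, {"raining", "going-outside"})
print(trace)
# → ['wet-if-raining', 'umbrella-if-raining-and-outside', 'slippery-if-wet']
```

Note that the third rule only becomes eligible after the first one fires: chaining small rules like this is how production systems build up multi-step reasoning, and also why writing every rule by hand took so much effort.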

These early systems were powerful in their own right but often required painstaking effort to define all the rules and knowledge. LLMs, trained on vast amounts of text, offer a new kind of power – a flexible, data-driven understanding of language and, to some extent, the world.

CoALA: Bridging Past and Present

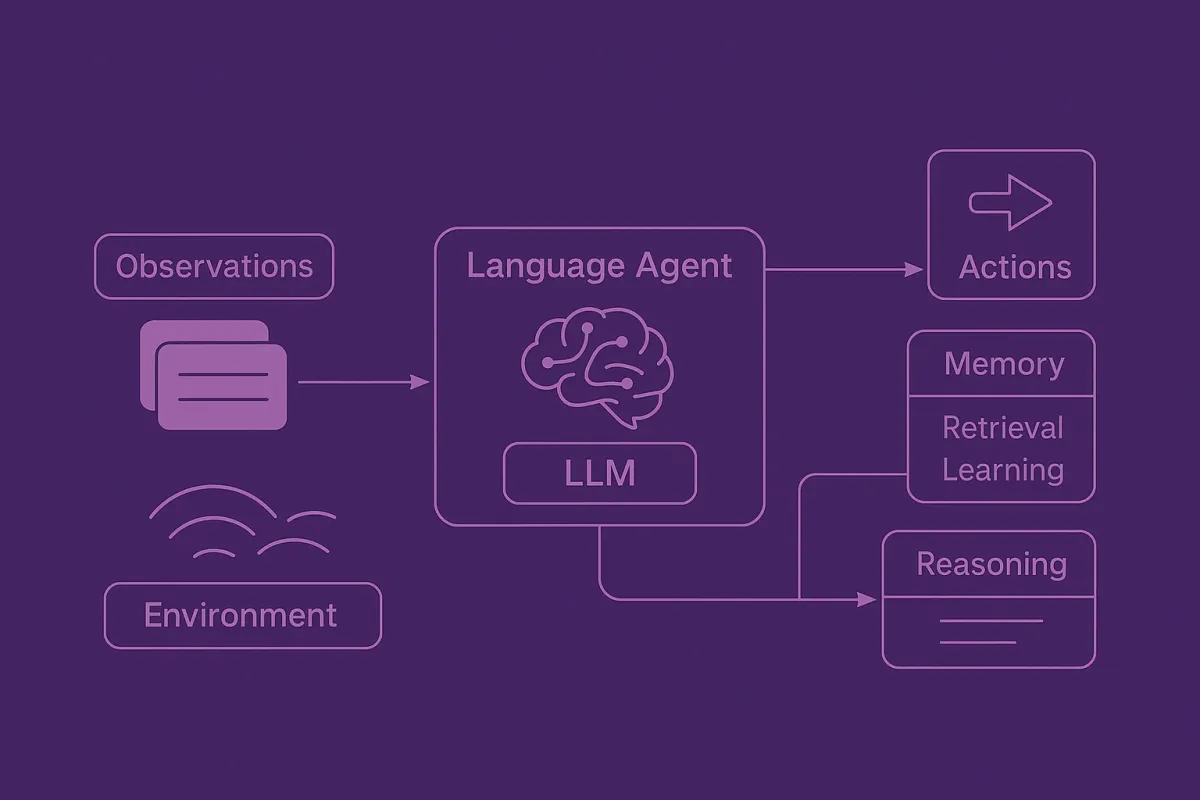

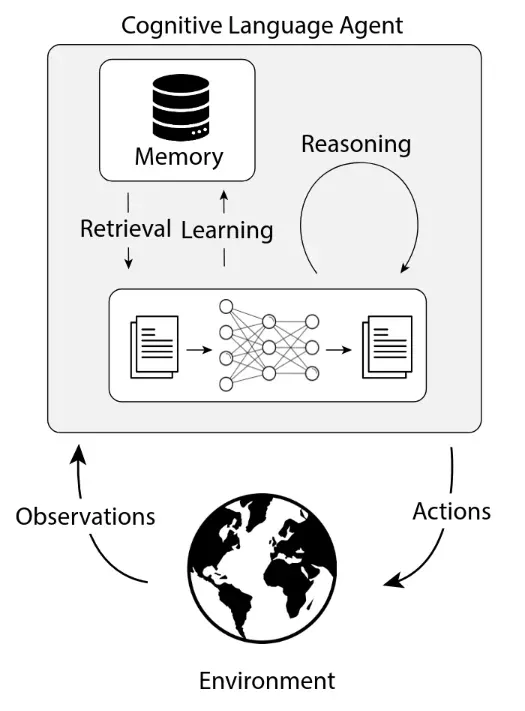

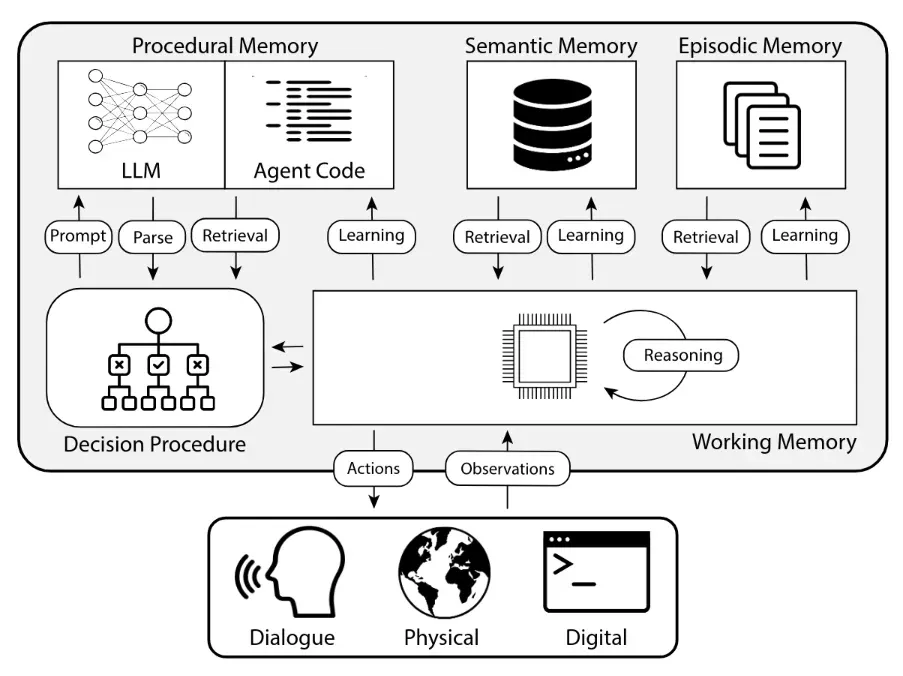

The CoALA framework aims to combine the best of both worlds. It takes the structured, component-based thinking from classic cognitive architectures and applies it to the new capabilities offered by LLMs. It proposes a way to describe any language agent based on three key aspects:

- How they remember (Modular Memory): Just like classic AI models (and us!), intelligent agents need different kinds of memory. CoALA suggests:

  - Working Memory: The agent's "RAM", holding the information it is actively using in the current decision cycle.

  - Long-Term Memory: Episodic Memory (past experiences), Semantic Memory (facts about the world), and Procedural Memory (how-to knowledge, stored both implicitly in the LLM's weights and explicitly in the agent's code).

- What they can do (Structured Actions): Agents act both internally and externally. CoALA distinguishes:

  - External Actions (Grounding): Interacting with the outside world, whether through APIs, robotics, or conversation with users.

  - Internal Actions: Operations on the agent's own memory, namely Retrieval (reading from long-term memory), Reasoning (using the LLM to update working memory), and Learning (writing new information to long-term memory).

- How they decide (Generalized Decision-Making): An agent runs a repeated decision cycle with two stages:

  - Planning: Proposing candidate actions, evaluating them (perhaps with the LLM's help), and selecting one.

  - Execution: Carrying out the selected action, observing the result, and starting the next cycle.
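To make the three components concrete, here is a minimal Python sketch of how they might fit together in code. CoALA is a conceptual framework rather than a library, so everything here is an assumption for illustration: the `Memory`, `Agent`, `CountingEnv`, and `toy_score` names are hypothetical, the "world" is a toy counter, and a hand-written scoring function stands in for the LLM that would normally evaluate candidate actions.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    """Illustrative CoALA-style memory modules (names are hypothetical)."""
    working: dict = field(default_factory=dict)   # active variables for this cycle
    episodic: list = field(default_factory=list)  # past (action, observation) pairs
    semantic: dict = field(default_factory=dict)  # facts about the world
    # Procedural memory is implicit: the Agent's code plus whatever model
    # sits behind the `score` callable.


class CountingEnv:
    """Toy external world: a counter the agent can move up or down."""
    def __init__(self) -> None:
        self.value = 0

    def apply(self, action: str) -> int:
        self.value += 1 if action == "increment" else -1
        return self.value


class Agent:
    def __init__(self, env: CountingEnv, score: Callable[[dict, str], float]) -> None:
        self.env = env
        self.score = score  # stand-in for an LLM that rates a candidate action
        self.memory = Memory(semantic={"actions": ["increment", "decrement"]})

    # --- Internal actions ---
    def retrieve(self) -> None:
        # Retrieval: copy known actions from long-term into working memory.
        self.memory.working["candidates"] = list(self.memory.semantic["actions"])

    def reason(self) -> None:
        # Reasoning: evaluate each candidate and write scores to working memory.
        w = self.memory.working
        w["scores"] = {a: self.score(w, a) for a in w["candidates"]}

    def learn(self, action: str, observation: int) -> None:
        # Learning: record the experience in episodic (long-term) memory.
        self.memory.episodic.append((action, observation))

    # --- External action (grounding) ---
    def ground(self, action: str) -> int:
        return self.env.apply(action)

    # --- Decision cycle: planning (propose, evaluate, select), then execution ---
    def decision_cycle(self) -> None:
        self.retrieve()                          # propose
        self.reason()                            # evaluate
        scores = self.memory.working["scores"]
        action = max(scores, key=scores.get)     # select
        observation = self.ground(action)        # execute
        self.memory.working["observation"] = observation
        self.learn(action, observation)


def toy_score(working: dict, action: str) -> float:
    """Hypothetical evaluator: prefer actions that move closer to the goal."""
    current = working.get("observation", 0)
    predicted = current + (1 if action == "increment" else -1)
    return -abs(working["goal"] - predicted)


agent = Agent(CountingEnv(), toy_score)
agent.memory.working["goal"] = 3
for _ in range(3):
    agent.decision_cycle()
print(agent.memory.episodic)
# → [('increment', 1), ('increment', 2), ('increment', 3)]
```

The sketch keeps learning to its simplest form, appending to episodic memory; in CoALA, learning actions can also update semantic or procedural memory. Swapping `toy_score` for a real LLM call would change how candidates are evaluated without touching the overall structure, which is the kind of modularity the framework argues for.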

By providing a structured, historically grounded way of looking at language agents, the CoALA framework doesn't just help researchers and developers organize existing work. It also highlights under-explored areas and offers a clearer path toward building more sophisticated and capable agents. It's a call to move beyond ad-hoc designs toward a more principled approach that draws on lessons learned over decades of AI research.

The paper suggests that by thinking in terms of these cognitive components, we can better understand the strengths and weaknesses of different agent designs. Ultimately, it's about building more robust, transparent, and truly intelligent systems, a goal AI researchers have pursued for generations, now supercharged by the power of LLMs.