Meet the DPU: The "Third Pillar" of Compute That Makes AI Clouds Possible

Your multi-million-dollar GPU fleet is wasting time on chores. The DPU is the third pillar of compute: a specialized processor that offloads infrastructure tasks, freeing up your CPUs and GPUs and enabling the entire business model of the secure AI cloud.

So far in our series, we've focused on the massive data highways of the AI factory: the network fabric. We've seen the battle between the incumbent king, InfiniBand, and the revolutionary open standard, Ultra Ethernet. But to truly understand the modern AI data center, we need to zoom in from the highway to the on-ramp. We need to talk about the unsung hero of the server, the traffic cop of the AI era.

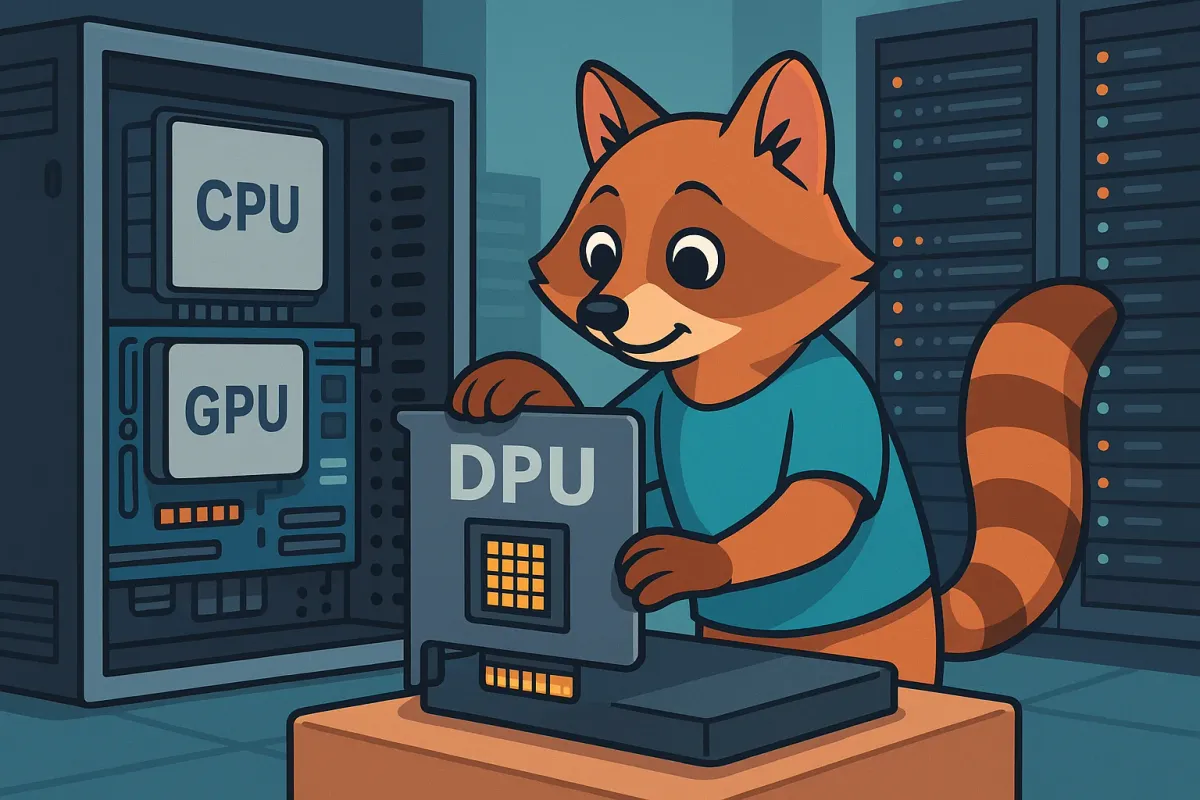

For decades, we’ve thought of a computer as having two brains: the CPU (the general-purpose workhorse) and the GPU (the parallel-processing specialist). But as AI factories have scaled to astronomical size, a new "third pillar" of compute has emerged, one that has gone from optional to absolutely essential.

Meet the DPU (Data Processing Unit), or as Intel calls it, the IPU (Infrastructure Processing Unit). This advanced piece of silicon, often living on the network card, is the secret ingredient that makes massive, efficient, and secure AI clouds possible.

The Problem: Your GPU is Wasting Time

Imagine you've spent millions of dollars on a fleet of the latest and greatest GPUs. Their one and only job is to perform trillions of calculations per second to train your AI model. Every clock cycle they spend waiting for data is money down the drain. The goal is 100% utilization.
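To make "money down the drain" concrete, here is a back-of-envelope sketch of how much GPU spend goes to idle time. The fleet size, hourly price, and utilization below are hypothetical placeholders chosen for illustration, not measured or quoted figures; the function name `idle_cost` is likewise our own.

```python
# Back-of-envelope sketch: how much GPU spend is lost to idle time.
# All inputs are hypothetical placeholders, not measured or quoted prices.


def idle_cost(num_gpus: int, cost_per_gpu_hour: float,
              utilization: float, hours: float) -> float:
    """Return the dollars spent on GPU-hours in which the GPUs sat idle.

    utilization is the fraction of time the GPUs do useful work (0.0 to 1.0).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    total_cost = num_gpus * cost_per_gpu_hour * hours
    # Whatever fraction of the time is not useful work is pure waste.
    return total_cost * (1.0 - utilization)


if __name__ == "__main__":
    # Hypothetical cluster: 1,024 GPUs at an assumed $2.00 per GPU-hour,
    # running for 30 days at 80% utilization.
    wasted = idle_cost(num_gpus=1024, cost_per_gpu_hour=2.00,
                       utilization=0.80, hours=30 * 24)
    print(f"Idle spend: ${wasted:,.0f}")
```

Because the waste scales linearly with `1 - utilization`, every point of utilization recovered is worth the same fraction of the whole fleet's bill, which is why shaving infrastructure overhead off the host matters so much.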

In a traditional server, the powerful host CPU is responsible for running the main application, but it's also burdened with a long list of infrastructure "chores":

- Networking: Managing the TCP/IP stack, running the virtual switch.

- Storage: Running protocols such as NVMe over Fabrics (NVMe-oF) to access data on fast network storage.

- Security: Encrypting data, running firewalls.

Forcing the CPU to do these chores is a huge performance bottleneck. It's like asking your star quarterback to also manage the stadium's plumbing and security. The CPU cycles spent on infrastructure are cycles not spent preparing data and feeding the priceless GPUs.

The Solution: Offload Everything

The DPU is designed to solve this problem by offloading the entire infrastructure stack from the host CPU. A DPU is not just a network card; it's a complete computer-on-a-chip, containing:

- A set of powerful, programmable CPU cores (usually Arm-based).

- A high-speed network interface capable of handling 400 Gb/s or more.

- A suite of dedicated hardware accelerators for networking, storage, and security tasks.

Moving all the infrastructure chores onto the DPU frees the host CPU to do one thing and one thing only: serve the application and keep the GPUs fed. Every cycle reclaimed from infrastructure work goes back to the application, which translates directly into higher GPU utilization.

The Real Superpower: Building the Secure AI Cloud

But performance is only half the story. The DPU's most critical role is creating a hard isolation boundary between the customer's world and the cloud provider's world.

Think about it. When you rent a virtual machine in the cloud, you are usually sharing the same physical server with other customers (tenants). You need an absolute guarantee that they can't see your data and you can't see theirs.

The DPU makes this possible. The tenant's application runs on the host CPU and GPU. The cloud provider's infrastructure (the management, security, and monitoring software) runs entirely on the DPU. The DPU acts as a secure airlock between the two domains.
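The airlock idea can be sketched as a toy model. This is a conceptual illustration only, assuming a simple rule that traffic is delivered only between VMs belonging to the same tenant; the class and method names (`DpuTenantFilter`, `assign`, `forward`) are hypothetical, and real DPUs enforce such policies in hardware flow tables, typically on top of overlay networks such as VXLAN. The key point it shows is that the VM-to-tenant mapping lives on the DPU side, out of reach of tenant software on the host.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Packet:
    src_vm: str    # VM that sent the packet
    dst_vm: str    # VM the packet is addressed to
    payload: bytes


class DpuTenantFilter:
    """Toy model of a DPU enforcing tenant isolation (hypothetical API).

    The VM -> tenant mapping is owned by the provider's control plane,
    which runs on the DPU; tenant software on the host cannot modify it.
    """

    def __init__(self) -> None:
        self._vm_to_tenant: Dict[str, str] = {}

    def assign(self, vm: str, tenant: str) -> None:
        # Called only by the provider's control plane running on the DPU.
        self._vm_to_tenant[vm] = tenant

    def forward(self, pkt: Packet) -> bool:
        """Return True if the packet may be delivered, False if dropped."""
        src = self._vm_to_tenant.get(pkt.src_vm)
        dst = self._vm_to_tenant.get(pkt.dst_vm)
        # Drop traffic from or to unknown VMs, and any cross-tenant traffic.
        return src is not None and src == dst


if __name__ == "__main__":
    dpu = DpuTenantFilter()
    dpu.assign("vm-a1", "tenant-a")
    dpu.assign("vm-a2", "tenant-a")
    dpu.assign("vm-b1", "tenant-b")
    print(dpu.forward(Packet("vm-a1", "vm-a2", b"hello")))  # same tenant
    print(dpu.forward(Packet("vm-a1", "vm-b1", b"hello")))  # cross-tenant
```

The design choice worth noticing is the default-deny rule: a VM the control plane has never registered cannot send or receive anything, so a misconfiguration fails closed rather than open.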

This secure, multi-tenant isolation is the fundamental architectural requirement for building public "AI-as-a-Service" clouds. It's what allows a cloud provider to securely rent out slices of its massive AI factory to thousands of different customers. The DPU is the linchpin of this entire business model.

The debate is no longer whether you need a DPU, but which DPU ecosystem you'll choose. The main players (NVIDIA's BlueField, AMD's Pensando, and Intel's IPUs) are all racing to become the indispensable third pillar in every AI server.