The Unseen Revolution: Why Small Models Could Be the True Engine of Agentic AI

Agentic AI is transforming the field, enabling fast, efficient, and affordable automation far beyond chatbots through small, specialized models. These Small Language Models (SLMs) can outperform large models in real-world tasks, powering the next generation of enterprise and on-device AI.

Beyond the Chatbot – The Dawn of the Agentic Era

The conversation around artificial intelligence is evolving. For years, the public imagination has been captured by generative AI—systems capable of creating text, images, and code in response to human prompts. Yet, a more profound transformation is underway, moving beyond mere content generation to autonomous action. This is the dawn of the agentic era, a paradigm shift toward AI systems that do not just respond, but act. Imagine an AI that doesn't just draft an email about a supply chain disruption but autonomously analyzes real-time shipping data, reroutes deliveries, notifies affected customers, and updates inventory levels—all without direct human intervention.

This post centers on the academic paper "Small Language Models are the Future of Agentic AI". It is an opinionated take on how the evolution of SLMs challenges current agentic AI architectures.

Agentic AI refers to a class of autonomous systems that can perceive their environment, reason, set goals, make decisions, and execute complex, multi-step tasks with limited human supervision. Unlike traditional rule-based AI, which follows a rigid script, or generative AI, which creates content based on learned patterns, agentic systems possess agency: the capacity to act independently and purposefully to achieve a predefined objective. This capability is not a distant fantasy; it represents the next major frontier in enterprise software. Market analysis firm Gartner predicts that by 2028, a third of all enterprise software applications will incorporate agentic AI, enabling up to 15% of day-to-day work decisions to be made autonomously.

The prevailing architectural choice for building these sophisticated agents has been to rely on the largest, most powerful Large Language Models (LLMs) available, such as OpenAI's GPT-4 or Anthropic's Claude 3.5 Sonnet, to serve as the central "brain". The logic seems intuitive: a more powerful, generalist model should be better equipped to handle the complexities of autonomous reasoning. However, this monolithic, LLM-centric approach has created a significant, often unspoken, bottleneck. The immense computational and financial costs associated with running these massive models for every minor decision create what can be described as a "generative AI paradox": the potential for automation is vast, but the practical, scalable deployment is often economically prohibitive. Using a trillion-parameter model to perform a simple, repetitive task like extracting a customer ID from an email is akin to using a Formula One race car for a trip to the grocery store—an impressive display of power, but profoundly inefficient and impractical for everyday use.

This raises a critical question for the future of the field: Is there a smarter, more efficient, and ultimately more scalable path to building the autonomous systems of tomorrow? The answer, according to a growing body of research and industry practice, is a resounding yes—and it lies not in scaling up, but in strategically scaling down.

The Great Downsizing: A New Paper Argues Smaller is Smarter

A pivotal academic paper, "Small Language Models are the Future of Agentic AI" (arXiv:2506.02153), formally challenges the "bigger is better" orthodoxy that has dominated the AI landscape. The authors present a compelling, evidence-based case that Small Language Models (SLMs)—typically defined as models with fewer than 10 billion parameters—are not just a viable alternative but are fundamentally better suited for the vast majority of tasks within an agentic system. The paper's thesis rests on three foundational arguments.

Argument 1: SLMs Are Now "Good Enough"

The first and most crucial point is that the performance gap between SLMs and their larger counterparts is rapidly closing for many agentic capabilities. Recent advancements in model architecture and training techniques have produced a new generation of SLMs that are surprisingly powerful, often matching or even exceeding the performance of much larger models from previous generations on core tasks like reasoning, instruction following, and tool use.

The paper provides concrete examples to substantiate this claim. Microsoft's Phi-3 Small, a 7-billion-parameter model, achieves language understanding and commonsense reasoning scores on par with 70-billion-parameter models of the same generation. Similarly, NVIDIA's Hymba-1.5B, a hybrid-architecture model, outperforms 13-billion-parameter models on instruction-following tasks. Perhaps most strikingly, Salesforce's xLAM-2-8B model has demonstrated state-of-the-art performance in tool calling, surpassing even frontier models like GPT-4o and Claude 3.5. This evidence indicates that raw parameter count is no longer the sole determinant of capability; efficient design and specialized training are proving to be just as, if not more, important.

Argument 2: Agentic Tasks are Inherently Specialized

The paper's second key insight is a fundamental re-characterization of the work that language models perform within agentic systems. Unlike open-ended human-AI conversations, the internal operations of an AI agent are composed of highly structured, repetitive, and narrowly-scoped sub-tasks. These are not requests for creative prose or complex philosophical debate; they are functional commands like "Classify this support ticket," "Extract the invoice number from this document," or "Call the weather API for zip code 90210".

For these deterministic tasks, the vast, generalized knowledge of an LLM is largely wasted overhead. An SLM, on the other hand, can be fine-tuned on a specific domain or task, achieving high precision and reliability without the computational baggage of a generalist model. This makes them a perfect match for the functional, non-conversational "internal monologue" of an AI agent.

Argument 3: The Economics are Undeniable

The final argument is a pragmatic one based on economics and performance. For the specialized tasks that constitute the bulk of an agent's workload, SLMs offer staggering advantages in efficiency.

- Cost: SLMs can be anywhere from 10 to 100 times cheaper to run per token than flagship LLMs. For an agentic system that may make thousands of internal model calls to complete a single user request, this difference transforms the total cost of ownership from prohibitively expensive to economically viable.

- Speed: With fewer parameters to process, SLMs deliver inference speeds that are 2 to 5 times faster than LLMs. This reduction in latency is critical for any application requiring real-time interaction, such as customer service bots or on-device assistants.

- Efficiency: SLMs require significantly less energy to run and can be deployed on consumer-grade hardware, such as a local GPU, rather than requiring massive, centralized cloud infrastructure. This not only reduces operational costs but also opens the door to new deployment paradigms.
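To make the cost argument concrete, here is a back-of-the-envelope sketch. The call count, tokens per call, and per-token prices are illustrative assumptions picked from within the ranges quoted in this post, not measurements of any particular model or provider.

```python
def cost_per_request(calls: int, tokens_per_call: int, usd_per_million_tokens: float) -> float:
    """Total model cost in USD for one user request."""
    total_tokens = calls * tokens_per_call
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Assumed workload: one user request fans out into many internal model calls.
CALLS_PER_REQUEST = 1_000
TOKENS_PER_CALL = 500

# Assumed prices in USD per million tokens (hypothetical, within the post's ranges).
LLM_PRICE = 10.00
SLM_PRICE = 0.25

llm_cost = cost_per_request(CALLS_PER_REQUEST, TOKENS_PER_CALL, LLM_PRICE)
slm_cost = cost_per_request(CALLS_PER_REQUEST, TOKENS_PER_CALL, SLM_PRICE)

print(f"LLM: ${llm_cost:.2f} per request")
print(f"SLM: ${slm_cost:.3f} per request")
print(f"Ratio: {llm_cost / slm_cost:.0f}x")
```

Under these assumptions a single request costs $5.00 on the large model and about 12.5 cents on the small one, a 40x gap. The absolute numbers matter less than the multiplier, which is applied to every request the agent serves.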

The following table provides a clear, at-a-glance comparison of these trade-offs, making the economic case for an SLM-first approach to agentic AI immediately apparent.

| Feature | Large Language Model (LLM) | Small Language Model (SLM) |
|---|---|---|
| Parameter Count | 100B - 1.7T+ | < 10B (often 1-7B) |
| Cost per Million Tokens | High (e.g., $5 - $15 for input on premium models) | Low to Very Low (often < $0.50, can be near-zero if self-hosted) |
| Inference Latency | High; often unsuitable for real-time interactive loops | Low; 2-5x faster, enabling real-time responsiveness |
| Ideal Use Case | Complex reasoning, open-domain conversation, strategic planning | Specialized, repetitive, and structured tasks (e.g., classification, data extraction, API calls) |
| Deployment Environment | Primarily large-scale cloud resources | Cloud, on-premises, and edge/on-device (smartphones, laptops) |
| Fine-Tuning Agility | Slow and expensive, requires massive datasets and compute | Fast and cheap, can be fine-tuned on consumer-grade GPUs in hours |

The paper goes beyond simply advocating for SLMs; it proposes a practical migration path called the "LLM-to-SLM conversion algorithm". This strategy reveals a powerful feedback loop. An organization can begin by deploying an agent powered by a generalist LLM. By securely logging all the internal prompts, tool calls, and responses, the system gathers a rich, high-quality dataset that perfectly captures the specific, repetitive sub-tasks the agent performs most frequently. This logged data can then be used to fine-tune a much smaller, more efficient SLM to take over these high-volume tasks. The expensive LLM is then reserved only for the complex, "long-tail" requests that truly require its power. In this model, the initial investment in a costly LLM effectively pays for the creation of its own more efficient replacement, creating a data flywheel that continuously optimizes the system for both performance and cost over time.
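The selection step of this feedback loop can be sketched in a few lines. This is a minimal illustration, not the paper's algorithm: the `LoggedCall` record, the task labels (which in practice might come from clustering the logged prompts), and the `min_share` threshold are all assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LoggedCall:
    task: str      # hypothetical task label, e.g. assigned by clustering prompts
    prompt: str
    response: str


def select_slm_candidates(
    log: list[LoggedCall], min_share: float = 0.2
) -> dict[str, list[LoggedCall]]:
    """Group logged LLM calls by task and keep only the tasks that are
    frequent enough to justify fine-tuning a dedicated SLM."""
    counts = Counter(call.task for call in log)
    total = len(log)
    frequent = {task for task, n in counts.items() if n / total >= min_share}

    datasets: dict[str, list[LoggedCall]] = {task: [] for task in frequent}
    for call in log:
        if call.task in frequent:
            datasets[call.task].append(call)
    return datasets


# Toy log: two high-volume tasks and one rare "long-tail" request.
log = (
    [LoggedCall("extract_invoice_number", f"doc {i}", f"INV-{i}") for i in range(6)]
    + [LoggedCall("classify_ticket", f"ticket {i}", "billing") for i in range(3)]
    + [LoggedCall("plan_trip", "two weeks in Japan", "...")]
)

datasets = select_slm_candidates(log)
for task, examples in sorted(datasets.items()):
    print(task, len(examples))
```

Each returned dataset would become fine-tuning data for one specialist SLM, while the rare `plan_trip` request stays with the LLM. A real pipeline would also need to filter sensitive data out of the logs before training.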

Empirical Benchmarking: SLMs vs. LLMs in Agentic Tasks

While theoretical arguments and case studies illustrate the potential of Small Language Models (SLMs), recent benchmark data provides hard evidence for their growing viability—and even superiority—on a range of real-world agentic tasks.

The following table summarizes key empirical metrics drawn from leading SLMs and LLMs, highlighting not just general performance, but practical cost, speed, and hardware factors:

| Metric | Small Language Models (SLMs) | Large Language Models (LLMs) |
|---|---|---|
| Typical Parameters | 100M – 10B | 70B – 1T+ |
| Reasoning Benchmark (MMLU, Phi-3 Small) | 69% | 70–71% (GPT-3.5) |
| Tool Use & Function Calling | xLAM-2-8B and hybrids match or beat GPT-4 | GPT-4, Claude 3.5 |
| Inference Speed | 2–5× faster | Significantly slower (cloud scale) |
| Cost per Million Tokens | $0.05–$0.50 (self-hosted) | $5–$15 (cloud API) |
| Energy Consumption | Up to 90% less than LLMs | Much higher (specialized servers) |
| Deployment | Edge, local, and cloud | Primarily cloud/datacenter |
| Fine-tuning Time | Hours (laptop or consumer GPU) | Days/weeks (cluster-scale) |
| Use Case Specialization | High (tasks, APIs, doc processing) | Broad open-ended/generalist |

- Phi-3 Small (7B): Matches 70B-scale models on reasoning; MT-bench: 8.7.

- Salesforce xLAM-2-8B: Excels in JSON/tool-calling, state-of-the-art on agentic sub-tasks.

- Operational Cost: SLMs, when self-hosted, cut runtime expenses drastically.

- Real-world Adoption: Enterprises like Clearwater Analytics report lower costs, greater agility after switching to SLM-driven architectures.

These results demonstrate that SLMs, when fine-tuned for targeted roles, not only keep pace with but—on efficiency, speed, and cost—often leap ahead of much larger generalist counterparts. For the specialized, repetitive workloads of agentic systems, small truly is mighty.

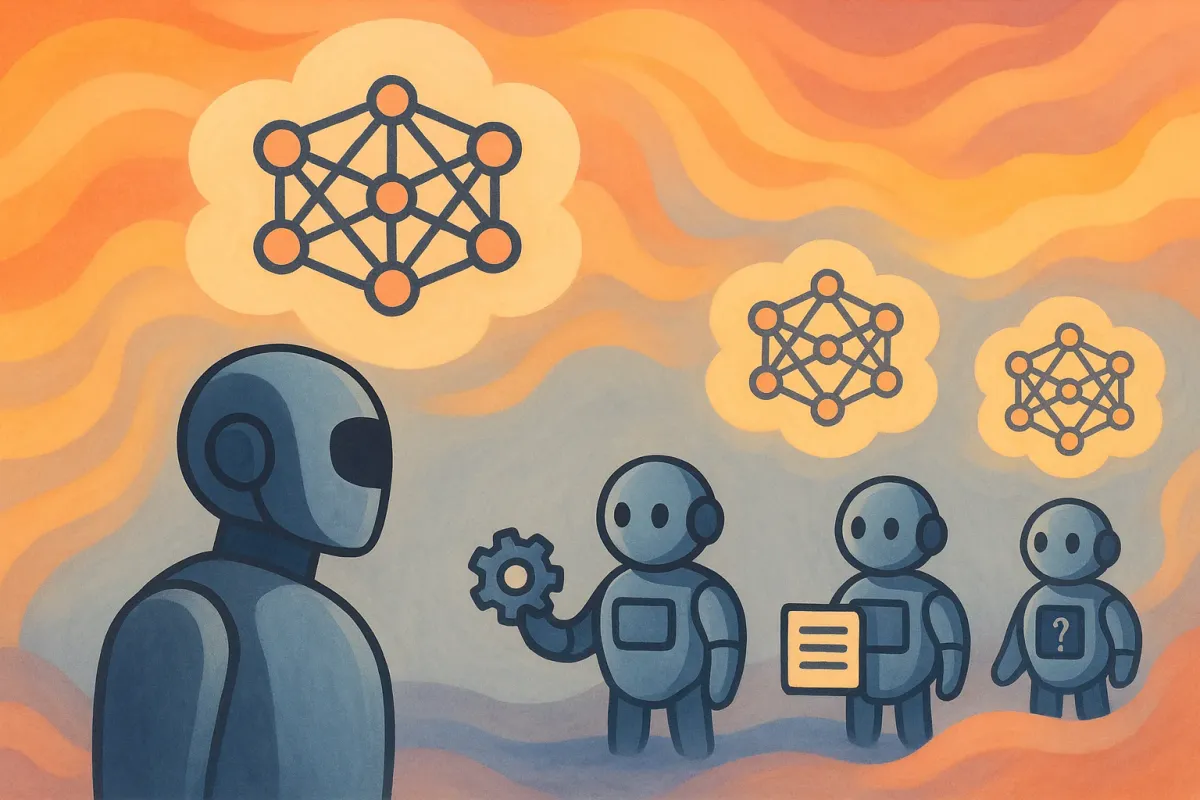

The New Blueprint: Building an AI "Team" with SLMs

The solution to the LLM efficiency problem is not a simple one-for-one replacement. Instead, it involves a fundamental architectural shift away from monolithic systems and toward heterogeneous, multi-agent systems. This new blueprint reimagines an AI application not as a single, all-knowing brain but as a collaborative team of digital specialists, each optimized for a specific role.

The "Team of Specialists" Architecture

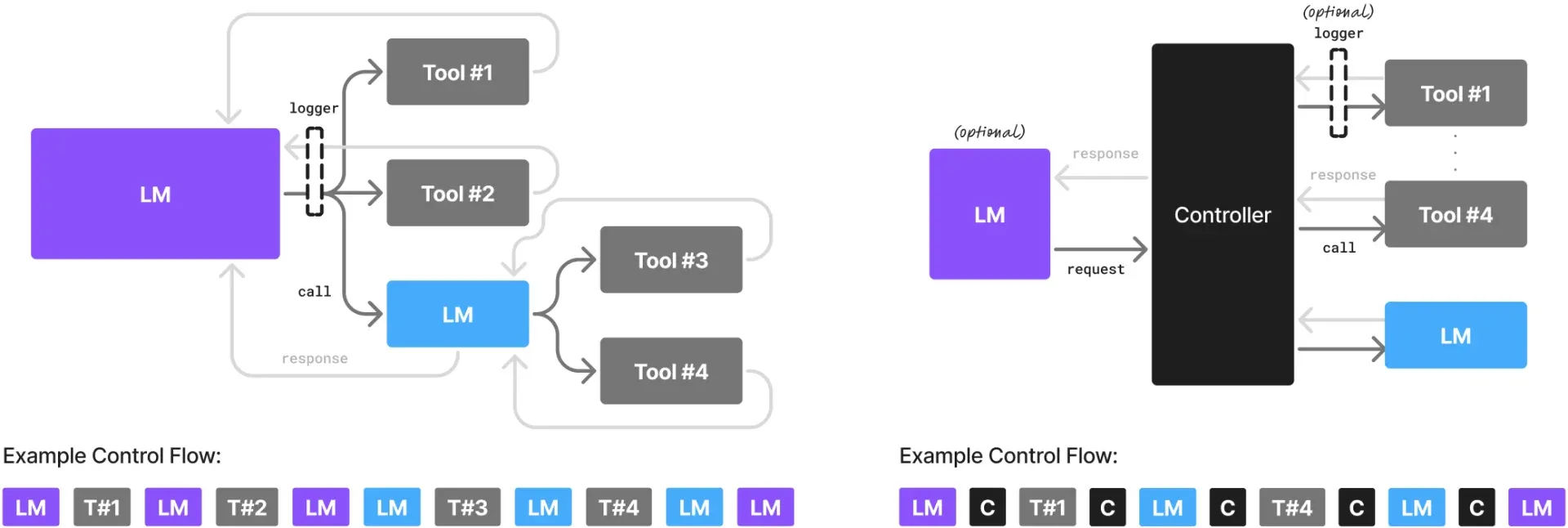

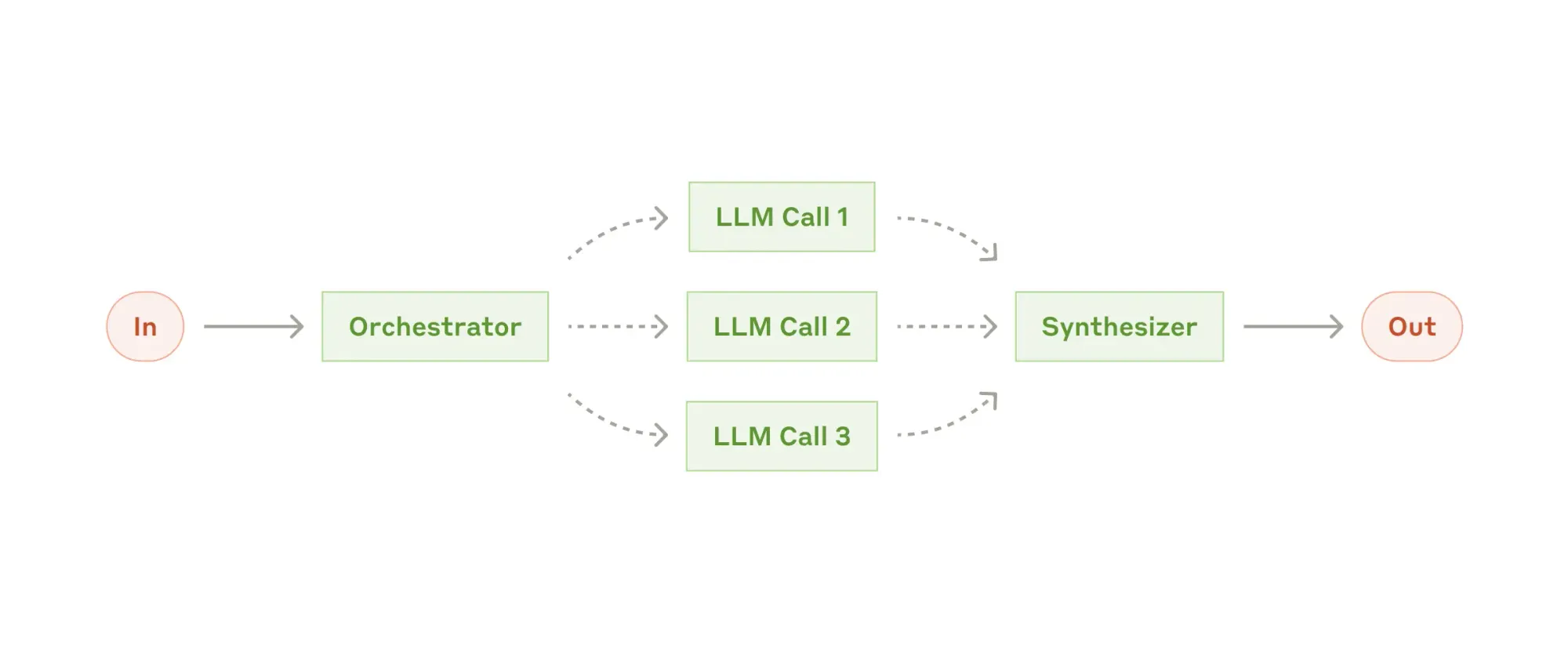

In this model, a complex user goal is broken down into a series of smaller, manageable sub-tasks. Each sub-task is then assigned to a specialized agent best suited for the job. This approach is typically orchestrated using one of two primary design patterns:

- The Supervisor/Worker Model: This hierarchical structure functions like a well-run project team. A high-level "supervisor" or "orchestrator" agent, often powered by a capable LLM for its strong reasoning and planning abilities, receives the initial goal. It analyzes the request, develops a step-by-step plan, and then delegates each step to a subordinate "worker" agent. These worker agents are typically powered by fine-tuned, highly efficient SLMs, each an expert in its narrow domain—one for retrieving data, another for summarizing text, a third for calling an external API, and so on.

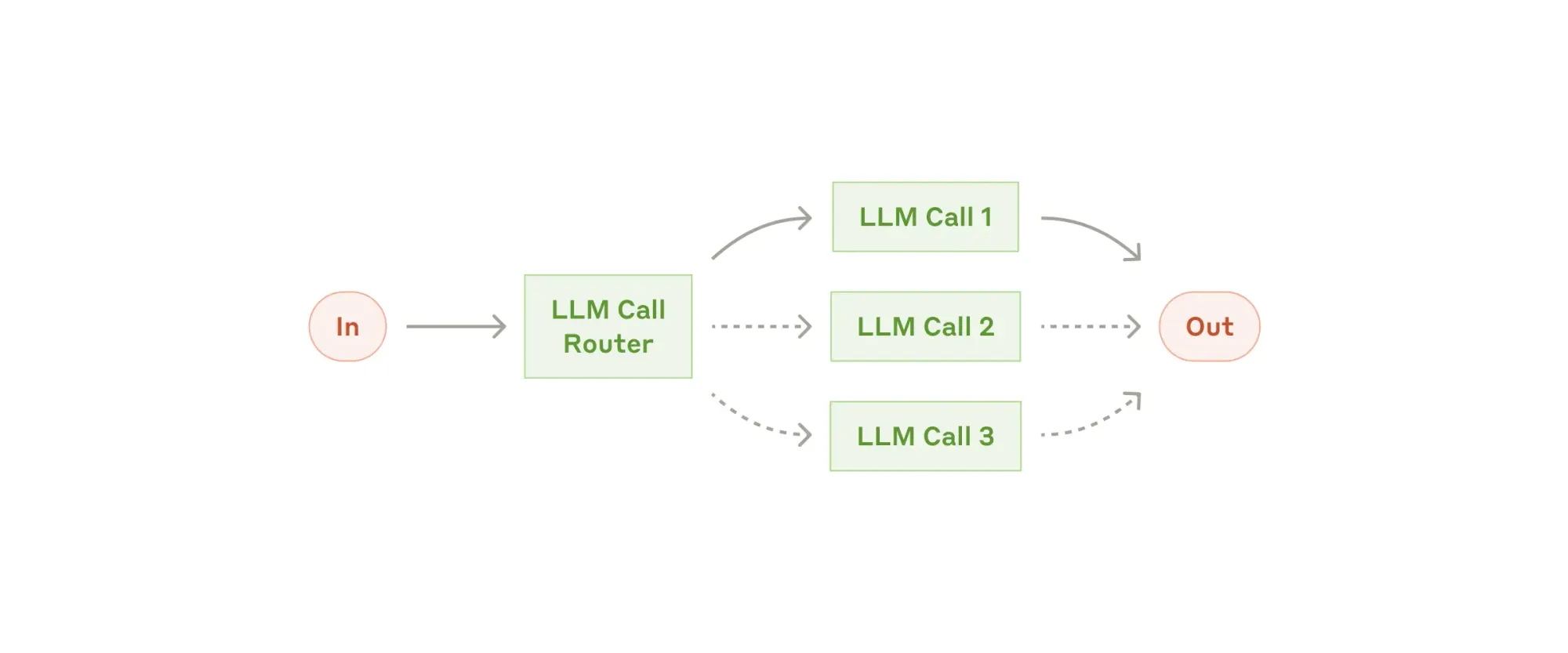

- The Router Model: A slightly simpler but effective pattern involves a "router" agent that acts as a smart switchboard. It receives an incoming query, uses a language model (often a fast SLM) to classify its intent, and then routes it to the appropriate downstream agent or workflow. For example, a customer service query identified as a "billing issue" would be sent to the billing agent, while a "technical problem" would be routed to the tech support agent.

Image Credits: Anthropic

This modular, "Lego-like" composition makes agentic systems more robust, scalable, and maintainable. If a particular specialist agent is underperforming or a new capability is needed, that single component can be fine-tuned or replaced without disrupting the entire system, drastically simplifying development and iteration cycles.
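The router pattern is small enough to sketch directly. In the example below, `classify_intent` is a keyword-matching stand-in for the fast SLM classifier described above, and the agent functions and intent labels are hypothetical placeholders rather than any framework's API.

```python
from typing import Callable


def classify_intent(query: str) -> str:
    """Stand-in for a fast SLM intent classifier.
    Keyword matching is an assumption made to keep the sketch runnable."""
    text = query.lower()
    if any(word in text for word in ("invoice", "charge", "refund")):
        return "billing"
    if any(word in text for word in ("error", "crash", "login")):
        return "technical"
    return "general"


def billing_agent(query: str) -> str:
    return f"[billing] handling: {query}"


def tech_support_agent(query: str) -> str:
    return f"[technical] handling: {query}"


def general_agent(query: str) -> str:
    return f"[general] handling: {query}"


# The switchboard: each intent label maps to one specialist.
AGENTS: dict[str, Callable[[str], str]] = {
    "billing": billing_agent,
    "technical": tech_support_agent,
    "general": general_agent,
}


def route(query: str) -> str:
    """Classify the query, then hand it to the matching specialist agent."""
    intent = classify_intent(query)
    handler = AGENTS.get(intent, general_agent)
    return handler(query)


print(route("I was charged twice on my invoice"))
print(route("The app shows an error on login"))
print(route("What are your opening hours?"))
```

Because the specialists sit behind a plain dictionary, swapping or adding one is a one-line change, which is the maintainability benefit the modular design is meant to deliver.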

Case Study Spotlight: Clearwater Analytics in Production

This architectural theory is already being proven in high-stakes production environments. Clearwater Analytics, a leading SaaS provider for investment management, has built and deployed a customer-facing, multi-agent system powered by fine-tuned SLMs. The system, which the company has discussed publicly in technical articles, presentations, and webinars, is composed of a team of "digital specialists" that are each aware of specific knowledge domains, applications, and data sources. By orchestrating these specialized agents with an emphasis on robust security, Clearwater automates complex financial analytics and reporting tasks and delivers value to its customers at scale. This real-world implementation supports the paper's thesis: the multi-agent, SLM-first approach is not just academically interesting but commercially viable and effective.

The following table illustrates how such a team of AI agents might be assembled for a common business workflow, highlighting the specialized roles that SLMs are perfectly suited to fill.

| Agent Role | Primary Task | Why an SLM is a Good Fit | Example Framework/Model |
|---|---|---|---|
| Orchestrator/Router | Decomposes user goals into sub-tasks; routes queries to the correct specialist agent. | For simple routing, a fast SLM is sufficient. For complex planning, an LLM may be used. | LangGraph for state management; GPT-4o for planning. |
| Data Retrieval Agent | Executes queries against a database (e.g., SQL) or vector store to fetch relevant information. | The task is highly structured and requires low latency for a responsive user experience. | Fine-tuned Phi-3 or Llama 3 8B on SQL generation. |
| Summarization Agent | Condenses long documents or retrieved data into concise summaries. | This is a narrow, repetitive task where a specialized model can outperform a generalist. | Fine-tuned Mistral 7B on company-specific report formats. |
| API Calling Agent | Formats requests and interacts with external tools and APIs (e.g., Salesforce, Slack). | Requires high precision in generating structured output (JSON) and strict adherence to formats. | Salesforce xLAM-2-8B, which excels at tool use. |
| Critique/Validation Agent | Reviews the output of other agents for factual accuracy, compliance with policies, or quality. | The task is often rule-based and deterministic, requiring reliability over creativity. | A fine-tuned SLM trained on examples of "good" vs. "bad" outputs. |

This architectural paradigm reveals that the relationship between LLMs and SLMs is not one of competition but of symbiosis. The rise of agentic AI is redefining the roles of these models within a larger system. LLMs are evolving into high-level strategic planners and orchestrators, valued for their broad reasoning capabilities. Simultaneously, the market for highly specialized, efficient SLMs is exploding, as they become the indispensable execution layer—the reliable "workers" that carry out the plan. This creates two distinct but deeply interconnected value chains in the AI economy, where the advancement of one type of model drives the demand and development of the other.

From Theory to Practice: The Tools and Platforms Building Tomorrow's Agents

This architectural evolution from monolithic models to collaborative agent teams is not just a theoretical exercise; it is being actively enabled by a rapidly maturing ecosystem of developer tools and enterprise platforms. These frameworks provide the essential plumbing—the orchestration logic, state management, and communication protocols—needed to build and deploy sophisticated multi-agent systems.

The Open-Source Developer's Toolkit

For developers building custom agentic applications, a vibrant open-source community has produced several powerful frameworks:

- LangChain: Often described as the "Lego set" for AI applications, LangChain provides a vast library of modular components for chaining together language model calls with external data sources and tools. Its recent evolution, particularly with the introduction of LangGraph, has shifted its focus toward supporting more complex, stateful, and cyclical multi-agent workflows, allowing developers to define explicit graphs of interaction between agents.

- Pydantic AI: Built by the team behind the ubiquitous Pydantic data validation library, Pydantic AI is a Python agent framework designed to bring reliability and structure to generative AI applications. Its core strength is ensuring that the inputs and outputs of language models are type-safe and conform to a predefined schema, which is critical for building predictable and robust agents. It supports complex, multi-agent workflows through patterns like agent delegation and graph-based control flows, allowing developers to build sophisticated systems using familiar Pythonic design.

- Microsoft AutoGen: This framework is designed from the ground up for creating multi-agent conversational systems. Its core philosophy is that complex problems can be solved through conversation between multiple specialized agents, which can include both AI and human participants. Its event-driven architecture and support for diverse conversation patterns make it a powerful tool for building collaborative agentic workflows.

- CrewAI: A fast-growing and popular framework, CrewAI focuses on an intuitive, role-based approach to agent orchestration. Developers define agents with specific roles (e.g., "researcher," "writer"), assign them tasks, and assemble them into a "crew" that works together to achieve a common goal. Its emphasis on simplicity and clear collaborative dynamics has made it a favorite for rapid development.
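The schema-enforcement idea that several of these frameworks build on can be illustrated without any of them. The sketch below uses only the Python standard library and is not the API of Pydantic AI or any other framework listed here; the `ToolCall` shape, the `get_weather` tool name, and the validation rules are assumptions for the example.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    tool: str
    zip_code: str


# Hypothetical tool registry for this sketch.
ALLOWED_TOOLS = {"get_weather"}


def parse_tool_call(raw: str) -> ToolCall:
    """Parse a model's JSON output and reject anything that does not
    match the expected schema, instead of passing it downstream."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc

    if not isinstance(data, dict) or set(data) != {"tool", "zip_code"}:
        raise ValueError("expected exactly the keys 'tool' and 'zip_code'")

    tool, zip_code = data["tool"], data["zip_code"]
    if not isinstance(tool, str) or tool not in ALLOWED_TOOLS:
        raise ValueError(f"unknown tool: {tool!r}")
    if not (
        isinstance(zip_code, str)
        and len(zip_code) == 5
        and zip_code.isascii()
        and zip_code.isdigit()
    ):
        raise ValueError("zip_code must be a 5-digit string")

    return ToolCall(tool=tool, zip_code=zip_code)


print(parse_tool_call('{"tool": "get_weather", "zip_code": "90210"}'))

try:
    parse_tool_call('{"tool": "delete_everything", "zip_code": "90210"}')
except ValueError as err:
    print("rejected:", err)
```

A validation library replaces the hand-written checks with a declared schema, but the contract is the same: an agent only acts on model output that has passed validation, and a failure can trigger a retry rather than a bad tool call.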

Enterprise-Grade Agentic Platforms

Recognizing the immense business value of agentic automation, the major cloud providers are racing to build enterprise-grade platforms that abstract away the underlying complexity of orchestrating heterogeneous models. These platforms provide managed services, visual builders, and pre-built integrations to accelerate deployment.

- Amazon Bedrock: AWS's platform for generative AI explicitly supports multi-agent collaboration. It allows developers to build a supervisor agent that can coordinate the actions of multiple specialized agents, leveraging a wide range of models available through the Bedrock service.

- Google's Agentspace and Agent Development Kit (ADK): Google is investing heavily in a unified agent development ecosystem. Agentspace and the ADK provide a modular, component-based architecture for creating hierarchical agent compositions that are deeply integrated with the Google Cloud platform, including Vertex AI and Gemini models.

- Microsoft Copilot Studio and Azure AI: Microsoft is embedding agentic capabilities across its entire software stack. Copilot Studio allows businesses to build and customize their own agents that can integrate with Microsoft 365 and other enterprise systems, managed through the Azure AI platform.

- Other Industry Players: The market is broad and growing, with companies like Databricks offering Agent Bricks for building data-centric agents and platforms like Dataiku providing tools for creating and governing AI agents at scale.

The rapid proliferation of these frameworks and platforms signals a classic technology adoption cycle. We are witnessing a race to create the dominant "operating system" for agentic AI. As the underlying language models, both large and small, become increasingly capable and commoditized, the strategic battleground is shifting. The key differentiator is no longer simply "who has the most powerful model," but rather "who provides the best platform for orchestrating, evaluating, and governing any model for a given task." The true value is moving up the stack, from raw intelligence to the sophisticated orchestration layer that can dynamically route tasks to the most efficient and cost-effective model for the job, whether it's a massive LLM from one provider or a specialized SLM from another.

The Next Frontier: Your Personal AI Agent, On Your Own Device

Perhaps the most profound implication of the shift toward smaller, more efficient models is the opportunity to move AI from the centralized cloud to the edge. Because SLMs are lightweight enough to run on consumer-grade hardware, they can be deployed directly on the devices we use every day: our smartphones, laptops, cars, and smart home appliances. This unlocks a new frontier for agentic AI, with transformative benefits for users and developers alike.

The Transformative Benefits of On-Device AI

Moving agentic processing from the cloud to the device addresses some of the most significant barriers to widespread AI adoption:

- Privacy and Security: This is a paramount advantage. When an agent runs locally, sensitive personal or corporate data—such as private emails, financial documents, or health information—never needs to be sent to a third-party server. This is a game-changer for privacy-conscious consumers and essential for compliance in regulated industries like healthcare and finance.

- Latency and Real-Time Responsiveness: By eliminating the round-trip network delay to a cloud server, on-device agents can respond instantaneously. This is critical for creating seamless user experiences in applications like real-time voice assistants, interactive video game characters, or factory-floor robotics where split-second timing matters.

- Offline Functionality: On-device agents can continue to function reliably even without an internet connection. This makes them far more robust and useful in a variety of real-world scenarios, from navigating in a remote area to working on an airplane.

- Cost: For the end-user and developer, on-device processing removes the recurring per-token API costs associated with cloud-based models. This makes it economically feasible to build applications that perform a high frequency of agentic tasks, which would be prohibitively expensive using a cloud-only approach.

The Democratization of AI Innovation

The rise of powerful, open-source SLMs, combined with the feasibility of on-device deployment, dramatically lowers the barrier to entry for building sophisticated AI applications. Startups, independent developers, and academic researchers no longer need access to massive capital or vast cloud computing resources to innovate at the cutting edge. This democratization is poised to trigger a Cambrian explosion of new, niche agentic applications, tailored to specific user needs and workflows that larger corporations might overlook.

This trend is forcing a fundamental rethinking of application architecture. The future is not a binary choice between "cloud-only" or "edge-only." Instead, the emerging paradigm is a hybrid-edge model. In this architecture, a lightweight, always-on SLM agent resides on the user's device, handling the majority (~80%) of common, everyday tasks locally and privately. When a task arises that requires the vast world knowledge or complex, multi-step reasoning that only a frontier model can provide (e.g., "Plan a detailed, two-week vacation to Japan during cherry blossom season, optimizing for travel time and budget"), the on-device agent intelligently escalates the request to a powerful cloud-based LLM. Apple's recent AI strategy, which combines on-device models for most tasks with a "Private Cloud Compute" fallback for more complex queries, is a prime example of this hybrid architecture in action. This dual-brained approach offers the best of both worlds: the privacy and speed of the edge with the power and knowledge of the cloud.
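One way to picture the escalation logic is a confidence gate in front of the cloud call. The sketch below is illustrative only: both model functions are stubs, the word-count heuristic stands in for whatever confidence signal a real on-device model would provide, and the 0.7 threshold is an arbitrary assumption.

```python
from dataclasses import dataclass


@dataclass
class LocalResult:
    answer: str
    confidence: float  # 0.0 to 1.0, as reported by the hypothetical local model


def on_device_slm(request: str) -> LocalResult:
    """Stub for a local SLM. Assumption: it is confident only on short,
    routine requests; a real model would supply its own signal."""
    routine = len(request.split()) <= 8
    return LocalResult(
        answer=f"local answer to: {request}",
        confidence=0.9 if routine else 0.3,
    )


def cloud_llm(request: str) -> str:
    """Stub for a call to a cloud-hosted frontier model."""
    return f"cloud answer to: {request}"


def handle(request: str, threshold: float = 0.7) -> tuple[str, str]:
    """Return (where_it_ran, answer). The request leaves the device
    only when the local model is not confident enough."""
    local = on_device_slm(request)
    if local.confidence >= threshold:
        return "device", local.answer
    return "cloud", cloud_llm(request)


print(handle("Set a timer for ten minutes"))
print(handle(
    "Plan a detailed, two-week vacation to Japan during cherry blossom "
    "season, optimizing for travel time and budget"
))
```

The design choice worth noting is that the default path is local: data is sent to the cloud only as an explicit exception, which is what preserves the privacy and latency benefits for the common case.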

Conclusion: It's Not About Size, It's About Specialization

The discourse surrounding artificial intelligence is undergoing a critical maturation. The initial, brute-force obsession with scale—the belief that bigger models are always better—is giving way to a more nuanced and pragmatic understanding of efficiency, specialization, and architectural intelligence. The future of agentic AI, as argued by leading researchers and demonstrated by pioneering companies, is not a single, monolithic AGI. Rather, it is a dynamic and collaborative ecosystem of specialized agents, working in concert like a highly effective team of human experts.

This SLM-powered, multi-agent paradigm offers a clear and compelling path forward. It is a blueprint for building AI systems that are not only more powerful but also more practical. By assigning the right task to the right model, this approach delivers solutions that are vastly more cost-effective, faster, more scalable, and more private than their LLM-only predecessors.

This architectural shift will do more than just improve existing applications; it will accelerate the transition of AI from a novel curiosity into a ubiquitous and invisible utility. By making autonomous agents economically viable and deployable everywhere—from the enterprise data center to the smartphone in your pocket—the small model revolution is poised to finally unlock the true promise of the agentic era, fundamentally reshaping how we work, create, and interact with the digital world.